This GPower case example is useful for many data scientists, even those who are very familiar with GPower. GPower is easy to use for sample size calculation (and free to download!). The challenge lies in choosing which inputs to enter into GPower, selecting which GPower functions fit your situation, and documenting the resulting sample size calculations for the various scenarios you envision.

The GPower case example I’m presenting here does NOT attempt to show you how to use GPower for calculating sample size in general. That would require a course in itself, because GPower has so many functions and options. It really is amazing software! Instead, I want to show you an example from a project I worked on with a Principal Investigator (PI). First, we had to choose an outcome measurement for the study (there were two options). Once we did that, we had to use pilot measurements of the selected outcome in GPower to calculate how many participants we needed in each group of our study. You can see this in my recorded livestream above.

GPower Case Example Scenario

Gingivitis

Gingivitis is a condition in which the gums become inflamed and bleed. An oral health practitioner can detect this by probing the gums with a dental probe. If they bleed, this is called “bleeding on probing” (BOP), and it is a sign of inflammation and gingivitis. To calculate BOP, oral health practitioners probe multiple sites in a patient’s mouth, then calculate the proportion (or percentage) of sites that bled on probing.
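Here is a minimal sketch of the BOP arithmetic. It assumes each probed site is simply recorded as bled or did not bleed. The function name and the 12-site patient below are hypothetical and only illustrate the proportion calculation.

```python
from typing import Sequence


def bleeding_on_probing(site_bled: Sequence[bool]) -> float:
    """Return BOP as the proportion (0 to 1.0) of probed sites that bled."""
    if len(site_bled) == 0:
        raise ValueError("at least one probed site is required")
    # True counts as 1 and False as 0, so sum() gives the number of bleeding sites.
    return sum(site_bled) / len(site_bled)


# Hypothetical patient: 12 probed sites, 3 of which bled on probing.
sites = [True, False, False, True, False, False,
         False, True, False, False, False, False]
bop = bleeding_on_probing(sites)
print(f"BOP = {bop:.2f} ({bop:.0%} of sites)")  # BOP = 0.25 (25% of sites)
```

Multiplying the proportion by 100 gives the percentage version of BOP.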

Treatment

The standard treatment for gingivitis is regular use of chlorhexidine mouthwash (abbreviated CX). It works very well, but it contains alcohol, so some patients do not like using it. It also stains the teeth.

So the PI, who is an innovator, developed a natural mouthwash alternative to CX called NSM. Our goal in this study was to test whether NSM performed at least as well as CX (if not better) in treating gingivitis. After enrolling participants, we’d randomize them, double-blind, to either NSM or CX. This design was possible because NSM and CX looked identical. We knew we could maintain the double-blind if we prepackaged the mouthwash and labeled it with study ID numbers for the randomization.

Study Visits, Outcome Measurement and Statistical Approach

Our study would have several visits.

- At baseline (Visit 1, or V1), we’d measure an outcome that is a marker of gingivitis and that we were trying to improve with CX and NSM.

- We thought we’d use BOP, as is standard in such studies. It ranges from 0 to 1.0 (in the proportion version).

- But we also wanted to try another experimental measure we had developed, which ranges from 1 to 25.

- The participant would undergo treatment after Visit 1, and finally, on the last visit, Visit 3 (V3), we would measure the outcome again.

To evaluate the outcome, we’d use a paired t-test comparing the measurement at V1 with the measurement at V3. If the test was statistically significant at p < 0.05 (i.e., we set α at 0.05), then we’d say the treatment worked. That holds only if the BOP or other measure decreased (not increased) to produce the statistical significance!
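To make the paired t-test concrete, here is a small sketch using only the Python standard library. The V1 and V3 values are hypothetical BOP proportions for five imaginary participants, and `paired_t` is an illustrative helper. The sketch computes the mean change, the t statistic on n − 1 degrees of freedom, and Cohen’s dz (mean difference divided by the SD of the differences), which is the effect size GPower asks for with matched pairs.

```python
import math
import statistics


def paired_t(v1, v3):
    """Paired t-test on V3 minus V1 differences.

    Returns (mean difference, t statistic, degrees of freedom, effect size dz).
    """
    if len(v1) != len(v3) or len(v1) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [after - before for before, after in zip(v1, v3)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)          # sample SD of the differences
    t = mean_d / (sd_d / math.sqrt(n))      # mean difference / its standard error
    dz = abs(mean_d) / sd_d                 # Cohen's dz for matched pairs
    return mean_d, t, n - 1, dz


# Hypothetical BOP proportions for five participants in one arm.
v1 = [0.40, 0.35, 0.50, 0.28, 0.45]
v3 = [0.30, 0.30, 0.38, 0.25, 0.35]
mean_d, t, df, dz = paired_t(v1, v3)
print(f"mean change = {mean_d:.3f}, t({df}) = {t:.2f}, dz = {dz:.2f}")
# mean change = -0.080, t(4) = -4.70, dz = 2.10
```

With 4 degrees of freedom, the two-sided critical value at α = 0.05 is about 2.776, so |t| = 4.70 would be significant here. The negative sign shows that BOP went down. A statistics package would also report the p-value directly; for example, `scipy.stats.ttest_rel(v3, v1)` runs the same paired test.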

However, that was not our main outcome. Because we were looking for NSM to be at least equivalent to CX, our main outcome was the difference between the groups in the change in BOP. This made the power calculation more challenging, because we didn’t want to have to recruit many people based on between-group effect sizes that we could not defend. This was a clinical setting, and the difference between 20 and 25 participants mattered in terms of cost, study duration, and other resources.

So instead, we calculated how many people we would need in each group to detect a change from V1 to V3, if there was one (i.e., a separate paired t-test in each group). Here was our rationale:

- Given CX’s track record, we felt that it would definitely be statistically significant even with a small sample, especially if we chose BOP as the outcome (this had been demonstrated over and over in the literature).

- If NSM was also statistically significant with the same small sample, then we could compare it to CX and see whether the changes were clinically comparable.

- But if NSM was not statistically significant, there would be no point in pursuing it. We would stay with CX!
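The plan in the list above can be sketched as follows: run one paired test within each arm, then put the two mean changes side by side for a clinical comparison. This is a hypothetical illustration. The BOP values are placeholders, not study data, and the post does not state an equivalence margin, so the sketch only reports the between-arm difference and does not formally test equivalence.

```python
import math
import statistics


def arm_summary(v1, v3):
    """Mean change (V3 minus V1) and paired t statistic within one arm."""
    if len(v1) != len(v3) or len(v1) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [after - before for before, after in zip(v1, v3)]
    mean_d = statistics.mean(diffs)
    t = mean_d / (statistics.stdev(diffs) / math.sqrt(len(diffs)))
    return mean_d, t


# Hypothetical BOP proportions (V1 list, V3 list) per arm; placeholders only.
arms = {
    "CX": ([0.40, 0.35, 0.50, 0.28, 0.45], [0.30, 0.30, 0.38, 0.25, 0.35]),
    "NSM": ([0.42, 0.33, 0.47, 0.30, 0.44], [0.33, 0.29, 0.36, 0.27, 0.36]),
}

changes = {}
for arm, (v1, v3) in arms.items():
    mean_d, t = arm_summary(v1, v3)   # negative mean change = BOP improved
    changes[arm] = mean_d
    print(f"{arm}: mean change = {mean_d:+.3f}, paired t = {t:.2f}")

# Clinical comparison: how far the NSM change is from the CX change.
print(f"NSM change minus CX change = {changes['NSM'] - changes['CX']:+.3f}")
```

Each paired t would then be checked against the critical value for its own degrees of freedom, as in the single-arm case.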

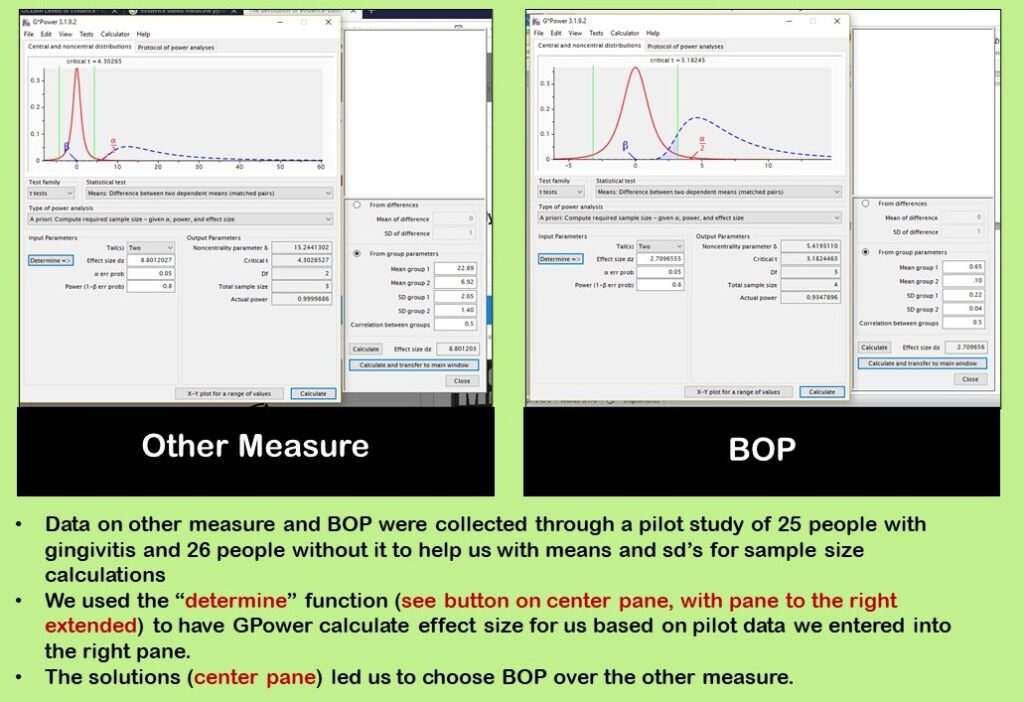

Pilot Study to get Estimates

The PI had done a pilot study in which she measured 25 people with gingivitis and 26 people without it, and she gave me the mean and standard deviation (SD) for each group. We could now use these in GPower to make our estimates.

How We Did the GPower Case Example Calculation

In the main window, our specifications were:

- Test family: t-tests

- Statistical test: Means: Difference between two dependent means (matched pairs)

- Type of power analysis: A priori: Compute required sample size – given α, power, and effect size

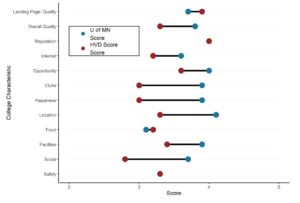

As you can see in our diagram, we used the “Determine” button to calculate the effect size. The effect size (“Effect size dz”) has to be entered in the center pane because this type of analysis computes sample size “given α, power, and effect size.” We had already chosen α = 0.05 and power = 0.80, so we still needed the effect size.
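To show how α, power, and dz combine into a sample size, here is a rough standard-library sketch. It is not GPower’s method. GPower uses the exact noncentral t distribution, while this sketch uses the common normal approximation with a small-sample correction, n ≈ ((z₁₋α/₂ + z_power) / dz)² + z₁₋α/₂² / 2. It usually lands close to the exact answer for moderate effect sizes but can come out slightly low when dz is large and n is small. The function name `n_pairs_approx` is hypothetical.

```python
import math
from statistics import NormalDist


def n_pairs_approx(dz: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of pairs for a two-sided paired t-test.

    Normal approximation with a small-sample correction:
        n = ((z_{1-alpha/2} + z_{power}) / dz)**2 + z_{1-alpha/2}**2 / 2
    (GPower computes this exactly with the noncentral t distribution.)
    """
    if dz <= 0:
        raise ValueError("dz must be positive")
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # two-sided critical value, ~1.96
    z_beta = z.inv_cdf(power)             # ~0.84 for 80% power
    n = ((z_alpha + z_beta) / dz) ** 2 + z_alpha ** 2 / 2
    return math.ceil(n)                   # round up to whole participants


print(n_pairs_approx(0.5))  # 34
```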

Clicking the “Determine” button makes the right panel slide out, and in that panel we entered data from our pilot study. We made separate estimates for BOP and for the other measure, as shown in the diagram. After entering the data, we clicked “Calculate and transfer to main window” at the bottom of the right panel. This populated the previously empty “Effect size dz” field. We could then click “Calculate” to get our sample size estimate.
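For matched pairs, one way GPower’s effect size panel can derive dz is from group parameters: two means, two SDs, and the correlation between the paired measurements. The formula is dz = |m₁ − m₂| / √(sd₁² + sd₂² − 2·r·sd₁·sd₂). The sketch below uses hypothetical placeholder values, not the PI’s pilot numbers. Note that a pilot comparing two separate groups of people does not supply r, so the correlation is an assumption you must choose. The loop shows how strongly dz depends on that choice.

```python
import math


def dz_from_group_params(mean1, mean2, sd1, sd2, r):
    """Cohen's dz for matched pairs from two means, two SDs and their correlation r."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("correlation must be between -1 and 1")
    # SD of the paired differences implied by the two SDs and the correlation.
    sd_diff = math.sqrt(sd1 ** 2 + sd2 ** 2 - 2 * r * sd1 * sd2)
    if sd_diff <= 0:
        raise ValueError("these inputs imply zero variance in the differences")
    return abs(mean1 - mean2) / sd_diff


# Hypothetical placeholder values (not the pilot study's actual means/SDs).
for r in (0.0, 0.5, 0.8):
    dz = dz_from_group_params(mean1=0.45, mean2=0.30, sd1=0.15, sd2=0.15, r=r)
    print(f"assumed r = {r:.1f} -> dz = {dz:.2f}")
```

A higher assumed correlation shrinks the SD of the differences and inflates dz, which in turn shrinks the required sample size. That is why it helps to document the assumed r alongside each GPower query.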

As you can see in our diagram, we looked at using BOP as well as the other measure. As a result of our pilot study and calculations, we chose to use BOP as the outcome, so we went on to calculate sample size for BOP.

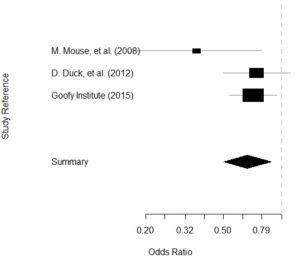

Documenting Sample Size Estimates from GPower Case Example

For documentation (data curation files) on this project, I kept:

- A Word document with notes and actual screenshots from GPower that documented which settings I used for my queries, and

- A spreadsheet with multiple tabs. I made an example for you to download here, and the screenshots below are taken from this example.

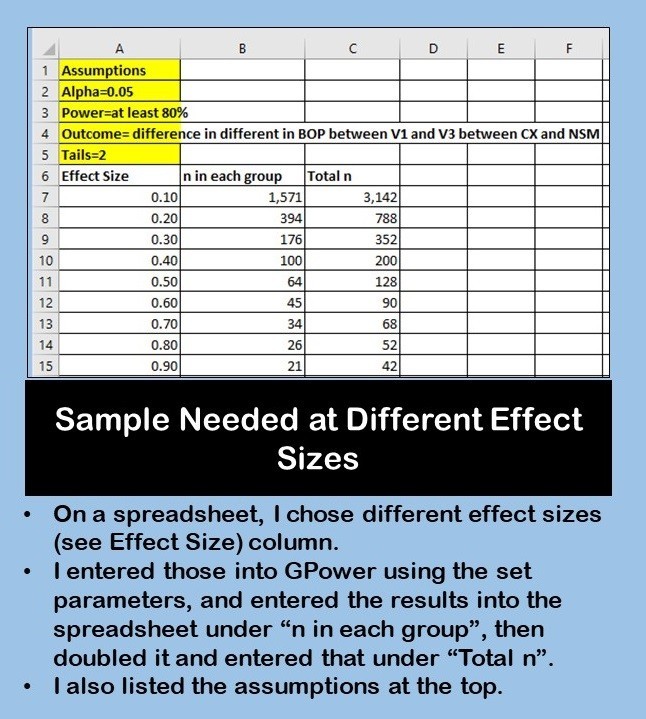

Since BOP ranges from 0 to 1.0, I decided to start by calculating sample size for a set of effect sizes between 0 and 1.0. I listed these effect sizes in a spreadsheet, along with the assumptions I entered into GPower for each calculation, and filled in the resulting sample sizes.
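A spreadsheet tab like this can be mimicked in a few lines, one row per candidate effect size. This hypothetical sketch reuses the normal approximation rather than GPower’s exact calculation, so its numbers may differ slightly from GPower’s output. Because each arm gets its own paired t-test, “n in each group” is the number of pairs, and the total is twice that.

```python
import math
from statistics import NormalDist


def n_pairs_approx(dz, alpha=0.05, power=0.80):
    """Approximate pairs needed for a two-sided paired t-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_beta) / dz) ** 2 + z_alpha ** 2 / 2)


# One row per candidate effect size, like one spreadsheet row per GPower query.
rows = []
for step in range(1, 11):  # dz = 0.1, 0.2, ..., 1.0 (dz = 0 would need infinite n)
    dz = step / 10
    n = n_pairs_approx(dz)
    rows.append((dz, n, 2 * n))
    print(f"dz = {dz:.1f}   n in each group = {n:4d}   total = {2 * n:4d}")
```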

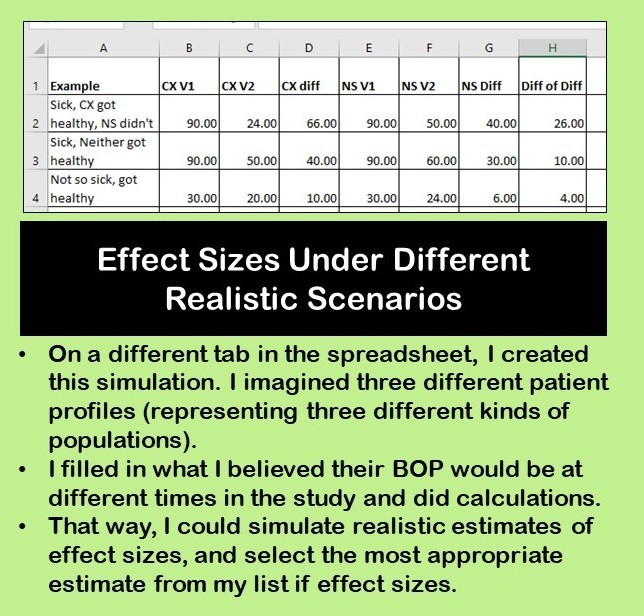

But then I realized I didn’t have any realistic idea of what the effect sizes might be. So I ran a simulation on a different tab of the spreadsheet. In this first simulation, I made up three imaginary patient scenarios and tried to simulate what their BOP (and change in BOP) would be from visit to visit.
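A simulation in this spirit can be written as a short script. Everything in it is hypothetical: the 168 probed sites (assuming 28 teeth with 6 sites each) and the bleeding-site counts for three imaginary patients are made up to show the arithmetic, not taken from the post’s spreadsheet.

```python
SITES_PROBED = 168  # hypothetical: 28 teeth x 6 sites per tooth

# Hypothetical bleeding-site counts at V1, V2 and V3 (made-up scenarios, not data).
patients = {
    "A": [84, 50, 25],  # strong responder
    "B": [60, 52, 45],  # modest responder
    "C": [40, 41, 40],  # non-responder
}

bop_change = {}
for name, bleeding_sites in patients.items():
    bop = [count / SITES_PROBED for count in bleeding_sites]
    bop_change[name] = bop[-1] - bop[0]  # V3 minus V1; negative = improvement
    visits = ", ".join(f"V{i + 1} = {b:.2f}" for i, b in enumerate(bop))
    print(f"Patient {name}: {visits}, change = {bop_change[name]:+.2f}")
```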

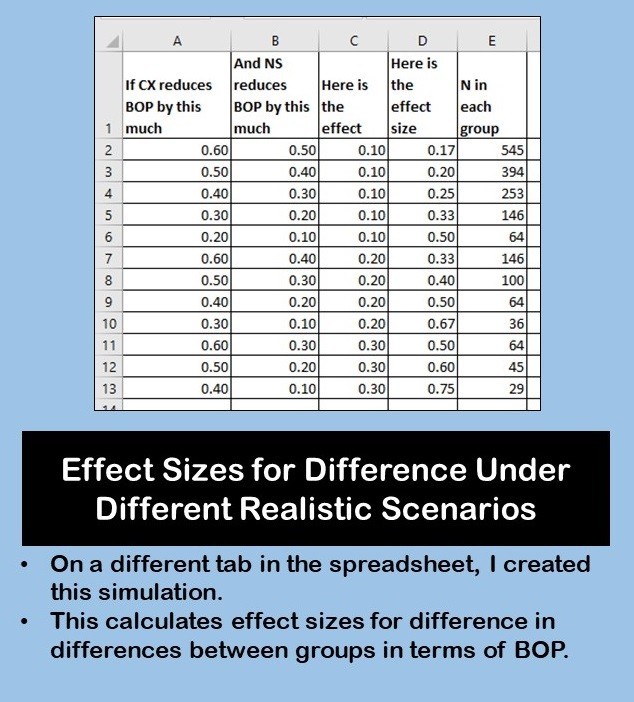

This gave me a more realistic idea of what to expect in real patients. I also wanted to calculate effect sizes for differences in BOP, which called for another simulation. I did that on the spreadsheet tab shown here.
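The second kind of simulation can be sketched by assuming a mean drop in BOP and an SD of that drop for each scenario. Dividing one by the other gives dz, which corresponds to GPower’s “from differences” route for matched pairs. The scenarios below are hypothetical, and the sample sizes come from the normal approximation rather than GPower’s exact calculation.

```python
import math
from statistics import NormalDist


def n_pairs_approx(dz, alpha=0.05, power=0.80):
    """Approximate pairs needed for a two-sided paired t-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_beta) / dz) ** 2 + z_alpha ** 2 / 2)


# Hypothetical scenarios: (assumed mean drop in BOP from V1 to V3, assumed SD of that drop).
scenarios = [(0.05, 0.15), (0.10, 0.15), (0.10, 0.10), (0.20, 0.15)]

results = []
for mean_change, sd_change in scenarios:
    dz = mean_change / sd_change  # dz = mean of differences / SD of differences
    n = n_pairs_approx(dz)
    results.append(n)
    print(f"drop = {mean_change:.2f}, SD = {sd_change:.2f} -> dz = {dz:.2f}, n in each group = {n}")
```

A small expected drop relative to its variability drives the sample size up quickly, which is why realistic scenarios matter more than the raw range of BOP.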

Interpretation – What Choice Should We Make?

In this GPower case example, we could look at the “n in each group” column on the first spreadsheet tab, and also at the “n in each group” column in the second simulation. I believe I advised the PI that, to be safe, she should enroll 50 in each group (total = 100). If you look at the bottom four scenarios in the second simulation, you’ll see that 50 is more than, or close to, those estimates.

However, the PI’s clinic did not have that many gingivitis patients, so we thought it might take a long time to recruit that many. I figured that if we lowered the target to 30 in each group, we could collect data from those 30, then run the numbers and see what we got. If we felt we needed a larger sample, we could recruit more at that point. If NSM just didn’t work, we’d eventually be able to tell.
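One way to see what lowering the target buys or costs is a sensitivity check: given n per group, what is the smallest dz a paired t-test could detect with 80% power at α = 0.05? GPower offers this directly as a “Sensitivity” type of power analysis. The hypothetical sketch below instead inverts the same normal approximation used above, so treat its output as approximate.

```python
import math
from statistics import NormalDist


def min_detectable_dz(n_pairs, alpha=0.05, power=0.80):
    """Smallest dz a two-sided paired t-test with n_pairs can detect (normal approximation).

    Solves n = ((z_alpha + z_beta) / dz)**2 + z_alpha**2 / 2 for dz.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    slack = n_pairs - z_alpha ** 2 / 2
    if slack <= 0:
        raise ValueError("n_pairs is too small for this approximation")
    return (z_alpha + z_beta) / math.sqrt(slack)


for n in (30, 50):
    print(f"{n} per group -> detectable dz of about {min_detectable_dz(n):.2f}")
```

Under this approximation, 30 per group can reliably detect a dz of roughly 0.53, versus roughly 0.40 with 50 per group.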

Monika’s General Rule of Clinical Power Calculations

As a general rule, there really should not be fewer than 30 people per group in a clinical study. No one can convince me that 10 or 12 or 14 people in a sample is scientific when it comes to healthcare studies. There is too much unmeasured confounding, bias, and measurement error. As my professor Dr. Yiliang Zhu at the University of South Florida once put it to us students,

“Do you really believe these 10 people adequately represent this whole population?”

I can probably force out a “yes” or a “maybe” if the number in that sentence is “30”, but I would not trust anything lower than that, personally.

What about you? Do you have any rules you follow when you have power calculations? Feel free to make a comment and share with all of us!

Updated November 13, 2023 – added video and banners.


This GPower case example shows a use case where we needed to select an outcome measure for our study, then do a power calculation for the sample size required under different outcome effect size scenarios. My blog post shows what I did, and how I documented and curated the results.