Benchmarking runtime in SAS is often done for data import and editing steps, and it is relatively easy because of a few of SAS’s features. First, SAS keeps an ongoing log file that automatically records a lot of information about each run, including runtime. Second, where you place a “run” command essentially tells SAS where to start and end each timed unit of work, so the log produces metrics consistent with run command placement. Third, SAS as a language does not efficiently support objects other than datasets, in that using other SAS objects – such as arrays and variables – is a somewhat costly and involved process. Therefore, benchmarking runtime in SAS consists mostly of collecting data from the log file.

Benchmarking Runtime in Most Other Programs

Benchmarking runtime in other data software (such as R and SQL) requires a different approach, because there is no continuously running log file like the one we have in SAS. Instead, benchmarking runtime in other programs generally goes like this:

- Develop the code you want to time, and make sure it runs properly.

- One line before that, insert a line of code that creates a variable that captures the system time as a value before the code runs.

- Insert a similar line of code after the code you want to time that creates a variable that captures the system time as a value after the code runs.

- Using your two variables, subtract the before time from the after time, and you will get the total run time.
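In R, the four steps above might look like the following minimal sketch. The `Sys.sleep(0.2)` call is only a stand-in for whatever code you want to time, and the variable names are made up for illustration.

```r
# Capture the system time just before the code to be timed
start_time <- Sys.time()

# The code you want to time (a placeholder that pauses for about 0.2 seconds)
Sys.sleep(0.2)

# Capture the system time just after the code has run
end_time <- Sys.time()

# Subtract the before time from the after time to get the total runtime
run_time <- end_time - start_time
run_time
```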

Again, in many instances, SAS will literally do this for you, and paste the results in the log file for you to harvest.

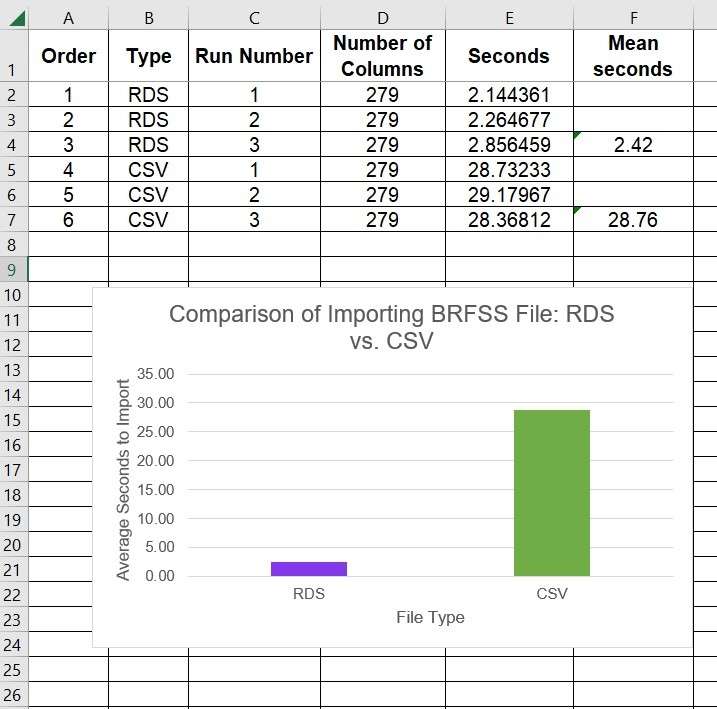

Benchmarking Runtime for Importing Data

A typical use case for benchmarking runtime is importing large datasets. To demonstrate this, I took a large dataset – a BRFSS dataset – and converted it to two data formats: R’s native *.rds format (“BRFSS_a.rds”), and *.csv (“BRFSS_a.csv”). Then, I imported both of them into R GUI and benchmarked the runtime each time. We would expect that the *.rds would be imported much faster than the *.csv, given that *.rds is R’s native format – but we will see for ourselves by calculating runtime for each situation.

First, I looked at the runtime for importing the *.rds version of the file with this code:

    start_read_rds <- Sys.time()
    Large_RDS_df <- readRDS(file = "BRFSS_a.rds")
    end_read_rds <- Sys.time()
    RDSTime <- end_read_rds - start_read_rds
    RDSTime

In this code, the variable start_read_rds is set to the system time (Sys.time()) before reading in the dataset, and the variable end_read_rds is set to the system time after reading in the dataset. Then, the variable RDSTime is calculated by subtracting the start time from the end time, and is displayed on the screen. I actually ran this code three times on my laptop, and each time, I got a value of a little over 2 seconds.

Now, let’s look at the code where I read in the same dataset in *.csv format on my laptop using R GUI. We expect this to take longer – but how much longer? Here is the code I used.

    start_read_csv <- Sys.time()
    Large_CSV_df <- read.csv(file = "BRFSS_a.csv")
    end_read_csv <- Sys.time()
    CSVTime <- end_read_csv - start_read_csv
    CSVTime

As you can see in the code, I used the same approach with the system time, setting start_read_csv to the system time before reading in the dataset, and setting end_read_csv to the system time at the end. I used the read.csv command to read in the dataset BRFSS_a.csv and create the dataframe Large_CSV_df. Then, I did the subtraction, and created CSVTime as the final runtime.
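One caveat with the subtraction approach: subtracting two `Sys.time()` values returns a `difftime` object, and R chooses the units (seconds, minutes, and so on) automatically based on the size of the difference. To compare two runtimes on the same scale, it may be safer to ask for seconds explicitly with `difftime()`. The sketch below uses made-up timestamps, not my actual measurements, and also shows base R's `system.time()` as an alternative that reports elapsed seconds.

```r
# Hypothetical timestamps 90 seconds apart (not real measurements)
start_time <- as.POSIXct("2022-12-13 09:00:00", tz = "UTC")
end_time   <- as.POSIXct("2022-12-13 09:01:30", tz = "UTC")

# Plain subtraction lets R choose the units; for this gap it picks minutes
auto_units <- end_time - start_time
units(auto_units)

# difftime() with units = "secs" reports seconds regardless of the gap size
secs <- as.numeric(difftime(end_time, start_time, units = "secs"))
secs

# system.time() wraps the code being timed and reports elapsed seconds
timing <- system.time(Sys.sleep(0.1))
timing[["elapsed"]]
```

If one import finishes in under a minute and another takes longer, the two subtraction results could print in different units, so converting both to seconds before comparing them avoids a misleading comparison.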

If you want to do this yourself, check out the GitHub code here. I didn't include the big BRFSS datasets because they were too large, but the code should work on any input dataset you have; just edit the code to change the file name.

Note: The readRDS() command does not have a period in it, and the read.csv() command does – just to drive you crazy.

Visualizing Benchmarking Results

When I ran the *.csv code three times, each time, it took at least 28 seconds. As you can tell from just my writing, this is a lot slower than reading in the *.rds. But how do we compare?

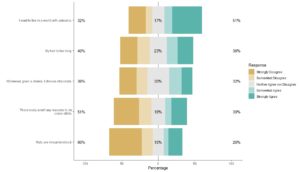

One way to compare is to just make a simple bar chart. This graphic shows that I took three runtime measurements all right next to each other (same environment, same time), averaged them together, and just made a bar chart comparing the averages.
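Here is a rough sketch of how the averaging and bar chart could be done in R. It is not the code behind the image above: it builds a small stand-in dataset in temporary files instead of using the BRFSS files, and `time_reads` is a hypothetical helper name I made up for this example.

```r
# Build a small stand-in dataset and save it in both formats (temporary files)
demo_df  <- data.frame(id = 1:50000, value = rnorm(50000))
rds_path <- tempfile(fileext = ".rds")
csv_path <- tempfile(fileext = ".csv")
saveRDS(demo_df, rds_path)
write.csv(demo_df, csv_path, row.names = FALSE)

# Hypothetical helper: time a reader function n_runs times, in seconds
time_reads <- function(read_fun, path, n_runs = 3) {
  sapply(seq_len(n_runs), function(i) {
    start_time <- Sys.time()
    read_fun(path)
    end_time <- Sys.time()
    as.numeric(difftime(end_time, start_time, units = "secs"))
  })
}

rds_times <- time_reads(readRDS, rds_path)
csv_times <- time_reads(read.csv, csv_path)

# Average the three measurements per format and chart the averages
avg_times <- c(RDS = mean(rds_times), CSV = mean(csv_times))
barplot(avg_times,
        ylab = "Average runtime (seconds)",
        main = "Average import runtime by file format")
```

To try it on real files, the two paths could be swapped for the names of your own *.rds and *.csv datasets.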

But this is a very simple case. At the US Army, I actually did a test like this (between MS SQL and PC SAS) for different sized datasets. I had a dataset with a million rows, one with 2 million, one with 4 million, etc. I had average runtimes for SQL vs. SAS at these different levels: 1 million rows, 2 million rows, 4 million rows, etc.

This structure of data lent itself more to a line graph, with the number of rows of the dataset along the x-axis, and the average runtime along the y-axis. In that case, MS SQL clearly outperformed PC SAS, and at larger datasets, we could see we were wasting 30 minutes per so many million rows by using PC SAS vs. MS SQL.
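I can't share the Army data, but the shape of that analysis can be sketched in R. In the example below, the row counts are tiny, the two "methods" are simply `readRDS()` and `read.csv()` standing in for the two platforms, and `avg_read_secs` is a hypothetical helper. None of the numbers it produces are the SQL vs. SAS results.

```r
# Stand-in comparison: two import methods timed at doubling dataset sizes.
# Sizes and methods are illustrative only, not the SQL vs. SAS study.
row_counts <- c(10000, 20000, 40000)

# Hypothetical helper: average elapsed seconds over n_runs reads of one file
avg_read_secs <- function(read_fun, path, n_runs = 3) {
  mean(sapply(seq_len(n_runs), function(i) {
    system.time(read_fun(path))[["elapsed"]]
  }))
}

results <- data.frame(rows = row_counts, rds_secs = NA_real_, csv_secs = NA_real_)
for (i in seq_along(row_counts)) {
  n <- row_counts[i]
  demo_df  <- data.frame(id = seq_len(n), value = rnorm(n))
  rds_path <- tempfile(fileext = ".rds")
  csv_path <- tempfile(fileext = ".csv")
  saveRDS(demo_df, rds_path)
  write.csv(demo_df, csv_path, row.names = FALSE)
  results$rds_secs[i] <- avg_read_secs(readRDS, rds_path)
  results$csv_secs[i] <- avg_read_secs(read.csv, csv_path)
}

# Rows on the x-axis, average runtime on the y-axis, one line per method
matplot(results$rows, as.matrix(results[, c("rds_secs", "csv_secs")]),
        type = "b", pch = 1:2, lty = 1:2, col = 1:2,
        xlab = "Rows in dataset", ylab = "Average runtime (seconds)")
legend("topleft", legend = c("RDS", "CSV"), pch = 1:2, lty = 1:2, col = 1:2)
```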

The reason I was doing this study was to try to advocate for switching from PC SAS to MS SQL. The arguments against switching were 1) my boss was friends with SAS, and 2) SAS was giving us free licenses. The problem was that the licenses were “costing” us on the other end, in consulting fees. My $95/hour SAS consultant was frustrated playing solitaire all day while we were trying to import millions of rows into PC SAS.

So when I did this study and saw the results, I thought my boss would be happy to switch to MS SQL for storage of our data lake, because we could then use our SAS consultants more efficiently. The SAS consultant who was helping me with the benchmark study was happy to use SQL, although she admitted she needed to get good at it, and there would be a learning curve. No problem! That would have been much cheaper than what we were doing.

But alas, the boss was anti-woman. He did not like the idea of women (me and the consultant) making recommendations. He instead ended the data lake project. I later learned this is not unusual when women gain power in tech – that they are not supported professionally, and have to overdefend their plans and requests for resources.

Environment is Important When Benchmarking Runtime

When I worked at the US Army, I came in early one morning, and benchmarked runtime on loading a dataset into my data lake using the desktop in my office. Later in the day, I ran the same code, and it was very bogged down. It really made a difference. I went around asking people at the location why they thought it was bogged down in the day. More people were on the network in the middle of the day, but we were the US Army – shouldn’t we have so much power that that didn’t matter?

I talked to some people at SAS and at the Army, and they pointed out that it actually also mattered what desktop I was using to launch the code (even though the code was executing on our network). If I launched code from the desktop in our conference room, I may get different results from the desktop in my office. So they strongly recommended to me to minimize the variables in the environment when running the code multiple times and measuring runtime.
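One way to keep track of the environment, sketched here in R under my own assumptions, is to store the machine name and the start time next to each runtime measurement, so that runs launched from different desktops or at different times of day can be told apart later. The helper name `benchmark_with_context` and the placeholder workload are hypothetical.

```r
# Hypothetical helper: time a function call and record environment details with it
benchmark_with_context <- function(expr_fun, label) {
  started <- Sys.time()
  elapsed <- system.time(expr_fun())[["elapsed"]]
  data.frame(
    label       = label,
    started_at  = format(started, "%Y-%m-%d %H:%M:%S"),
    machine     = Sys.info()[["nodename"]],
    r_version   = R.version.string,
    elapsed_sec = elapsed
  )
}

# Placeholder workload; in practice this would be the import being timed
run_log <- benchmark_with_context(function() Sys.sleep(0.1), "demo run")
run_log
```

Rows from repeated runs could be stacked with `rbind()` and then filtered to compare only measurements taken on the same machine during the same part of the day.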

This led me to ask: What is the “correct” environment? Theoretically, the “correct” environment would entail launching the code from the desktop of our data analysts during the day when they are working. I didn’t want to bother them, so I just used my own desktop, but chose to launch the code within their working hours.

Updated December 13, 2022. Revised banners June 18, 2023.


Benchmarking runtime is different in SAS compared to other programs, where you have to request the system time before and after the code you want to time and use variables to do subtraction, as I demonstrate in this blog post.