Classification crosswalks are useful if you want to reduce the number of levels in categorical variables you want to analyze. In biostatistics, we would say that a categorical variable with 77 levels would have too many levels for us to analyze, and we’d want to reduce the levels. If we were doing a chi-square analysis, even 10 levels would be too many. In data science, we would say that 77 is too high of “cardinality” for this variable, and we’d want to reduce the cardinality. Either way, classification crosswalks can help you.

Classification Crosswalks Example

This example comes from me helping one of the members of our online data science mentoring program. She was thinking of doing a study of pharmacy claims data from the Medicare Part D Prescribers online database.

We read a report we found online based on prescribers of pain medication in New Hampshire (NH), so she downloaded the NH data from this database. Our next step was to make classification crosswalks – but we ended up changing the research question, so we never completed this step. I decided to use data from this download to demonstrate on this blog post how to make classification crosswalks for these data so you can do something similar for your portfolio project!

Why We Need Classification Crosswalks

We were doing our initial data curation work in Microsoft Excel. If you want to download the spreadsheet, go to my GitHub here and look for “Provider Crosswalk”.

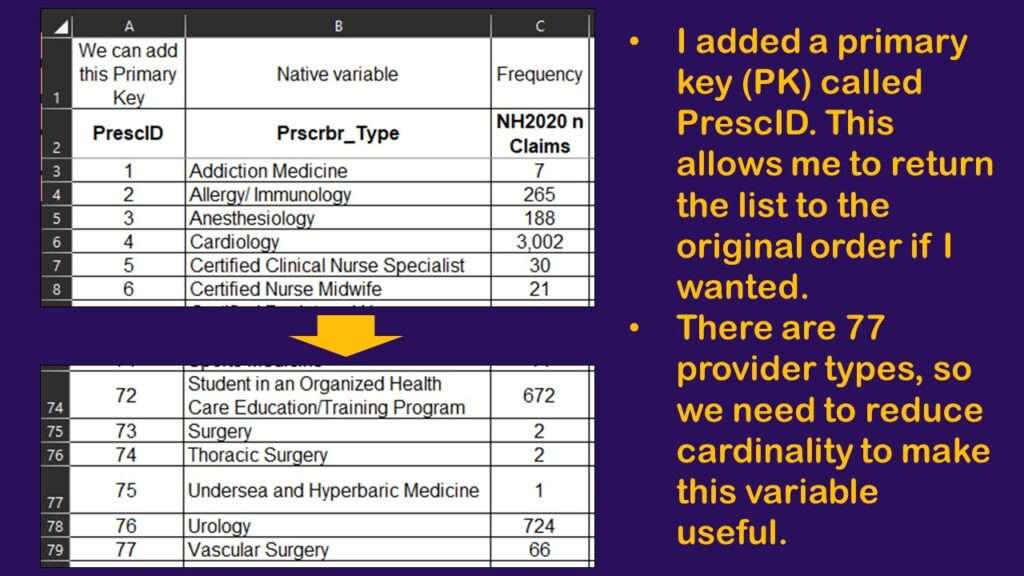

The mentoring program participant exported a spreadsheet of count data. The counts were number of providers from NH who prescribed a certain pain medication in 2020 (a query filter had been applied). The output included just the provider types that were responsible for any claims (e.g., had a frequency of claims greater than 0). The variable Prscrbr_Type was the native name of the provider type descriptor column. I made a graphic to summarize the output.

As you can see in the graphic, I added a primary key (PK) I called PrescID, and this is just a sequence of numbers from 1 to 77 (the number of rows). That way, I could sort the spreadsheet by any column, but then sort it by PrescID to get it back to its original order.

So, we had too many provider types – namely 77. The topic was pain medication, so I know that it is logical for certain types of providers – like surgeons – to prescribe pain medication. But it’s not normal for mental health providers to prescribe pain medication. I thought about that for classification purposes.

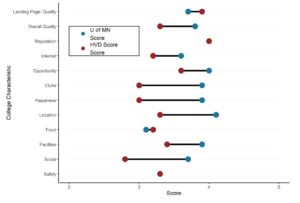

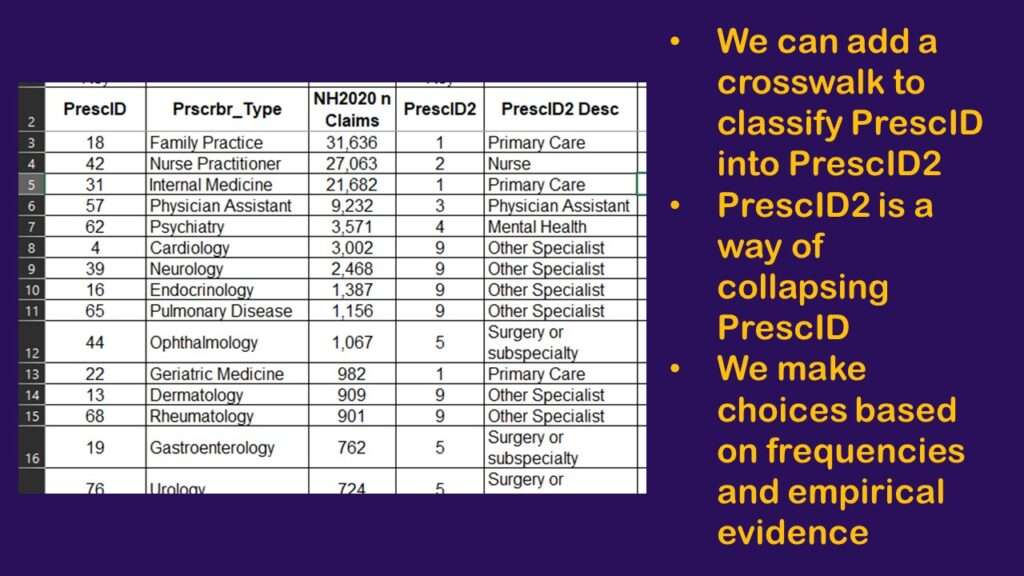

Now that I had my PK, I sorted the data by the frequency of claims from most to least. Using the empirical logic I was talking about earlier, plus the frequency information on the spreadsheet, I created a new foreign key (FK) called PrescID2 (with a description column called PrescID2 Desc), which is what you do with classification crosswalks, so I could collapse these provider types into these smaller categories, as you’ll see in the graphic.

As you can see in the graphic, I classified both Family Practice and Internal Medicine under Primary Care (PrescID2 = 1). I coded it 1 on purpose – because I wanted that to be the first category presented each time I did data analysis using PrescID2. How I knew what to code under primary care comes from my background, as well as lists online of what professions can be classified into what classifications.

Also, it was important for me to keep “nurse” and “physician assistant” separate. It is not shown on the graphic, but other nurse entries were also coded as “nurse” – but “physician assistant” remains alone. Those will be very big categories that I kept separate because they have different training pathways. In other words, if any of these groups were prescribing “too much” pain medication, there could be a training intervention. Again, these classifications are based on my empirical knowledge, as well as what frequencies I was seeing in the data.

Others may argue with my classifications, and even with my coding – but if I’m doing a portfolio project for data science, my focus is just keeping documentation of what I am doing so I can share it.

Quick Analysis with a Pivot Table

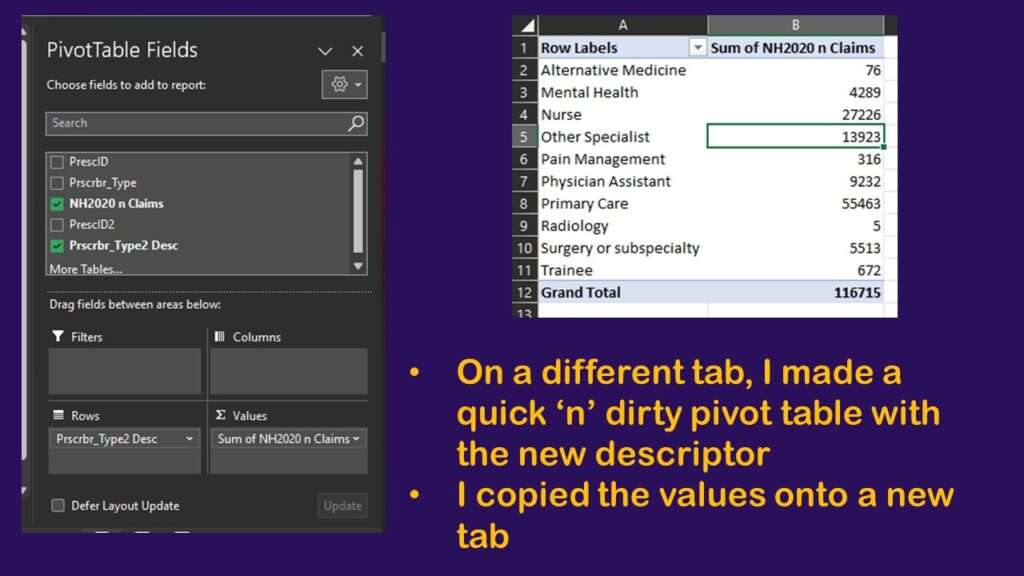

Those of you familiar with Excel might know about how to make a pivot table. I don’t like to use them except as “temporary calculators”. What I mean by that is I will make a tab on an Excel spreadsheet called “Pivot Table”, and then use it to make calculations which I copy out of there and “paste values” into some other tab. That’s because pivot tables change as you adjust them – they are essentially queries that update as you change the criteria. So I treat them that way.

I made a tab called “Pivot Table” and then set up the pivot table as you can see in the graphic.

As you can see, working with classification crosswalks allowed us to reduce the cardinality from 77 to 10. Was that good enough? I had to first copy the values out of the pivot table and paste them onto another tab. I made a tab called “analysis” and put the data on that tab.

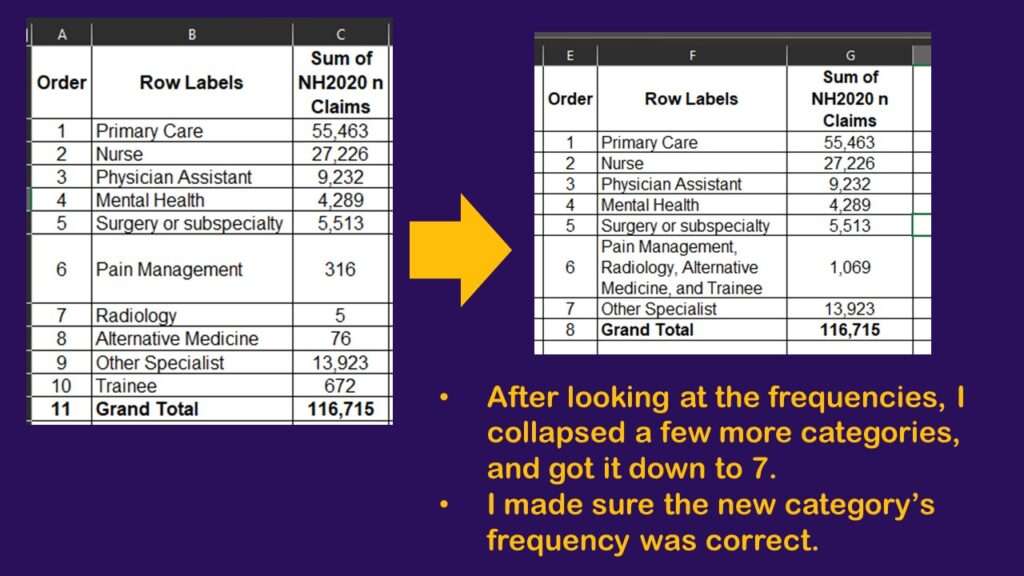

After taking a look at the new frequencies in the collapsed categories, I decided the cardinality was still too high. I further classified them down into 7 categories, as you can see in the graphic.

As you can see in the graphic, after copying the data out of the pivot table, I added an “order” column and added PrescID2 to it. That way, I could sort it and get it in the order I wanted. That made it easy for me to decide what to further collapse. As you can see, I put four of the categories together. I just changed what it said under Row Labels, and I used the addition function in Excel to add up the number of claims.

Classification Crosswalks: Purpose for Portfolio Project

The original plan to do a portfolio project on these claims data is what prompted this data curation effort in the first place. The purpose of doing these classifications was that we wanted to choose a provider type to focus on. The original topic was to look into what prescribers might be prescribing pain medication when they should not be.

So in other words, doing this classification was actually part of curating the data and planning for a portfolio project. It wasn’t the project itself. Therefore, using Excel to produce some results was not a bad idea. Excel can produce simple results quickly that can allow you to make study design decisions.

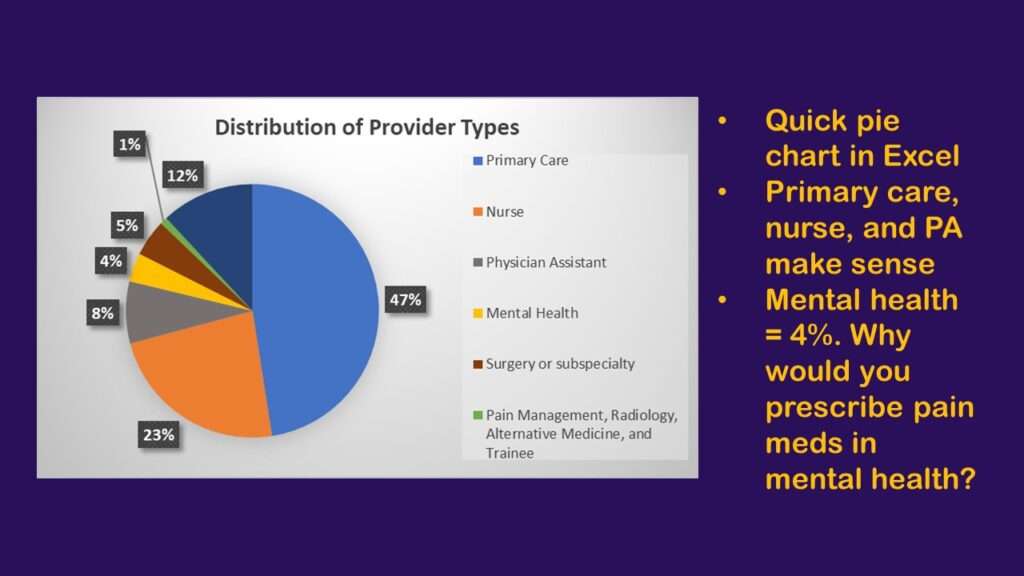

For example, I threw together a pie chart based upon our final classifications.

In this pie chart, the largest three pieces – prescriptions written by primary care, nurses and physician assistants – make sense. But mental health practitioners prescribing pain medication? That doesn’t look right to me. Maybe it’s fine, but I think that would be an interesting topic to examine.

This is where I’d do a deep dive, and try to figure out what is going on. That is an example of a topic that could be further explored in a data science portfolio project.

Added video January 19, 2024.

Read all of our data science blog posts!

Classification crosswalks are easy to make, and can help you reduce cardinality in categorical variables, making for insightful data science portfolio projects with only descriptive statistics. Read my blog post for guidance!