Dataset source documentation for a data science project is necessary if you have a complex set of source datasets that feed into your analytic dataset. You won’t have this problem if you are analyzing a simple cross-sectional dataset like the BRFSS: there is only one source dataset, so there is nothing to document. However, if you have to piece together your analytic dataset from multiple source datasets and you don’t keep track of all the source datasets you used and where each source variable came from, you might get confused during your analysis. Worse, if someone asks you to modify your analysis, you won’t remember what you did.

Dataset source documentation does not have to be complicated. Although you need to keep data dictionaries on all your source datasets, adding a simple diagram like the ones I’ll present here can be extremely helpful to anyone assisting you or working on the same analytic team.

Dataset Source Documentation Use Case #1: Keeping Track of NHANES Data

I wrote a whole blog post on how NHANES – which is supposed to be a cross-sectional surveillance dataset, like BRFSS – is actually served up in many different tables for legacy reasons. This can get very confusing, especially if you are doing an epidemiologic analysis.

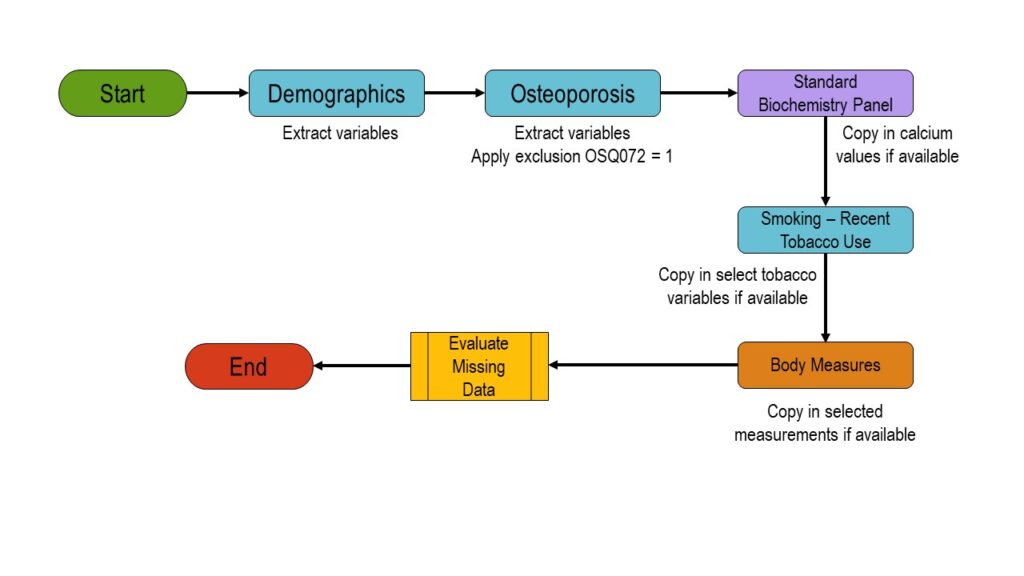

To give an example, I had a customer who wanted to study people who had osteoporosis using the NHANES data. To determine which respondents from the NHANES dataset belonged in her sample, she filtered on a question in the Osteoporosis Questionnaire dataset (asking if they took medication for osteoporosis).

The problem is that in order to do her osteoporosis analysis, she needed data from other NHANES datasets. She needed age and other demographic data from the demographic dataset, calcium levels from one of the lab datasets, tobacco use patterns from the smoking questionnaire dataset, and body measurements (especially body mass index, or BMI) from the Body Measures examination dataset.
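NHANES tables share a respondent sequence number, SEQN, which is what lets you link them. The sketch below is not the code we used; it only illustrates the general pattern, assuming each table has been loaded as a dictionary keyed by SEQN. All variable names and values are hypothetical placeholders, not real NHANES variable names. It filters the osteoporosis table first and then left-joins the other tables, so a respondent missing from a table (here, 1003 has no lab record) keeps a row with an empty value instead of silently dropping out.

```python
from typing import Callable

# A table maps each respondent ID (SEQN in NHANES) to that respondent's variables.
Table = dict[int, dict[str, object]]


def build_analytic(
    base: Table,
    keep: Callable[[dict], bool],
    others: list[tuple[Table, list[str]]],
) -> list[dict]:
    """Filter the base table, then left-join selected variables from other tables by SEQN."""
    rows = []
    for seqn in sorted(base):
        record = base[seqn]
        if not keep(record):
            continue  # respondent is not in the analytic sample
        row = {"SEQN": seqn, **record}
        for table, variables in others:
            source = table.get(seqn, {})
            for var in variables:
                row[var] = source.get(var)  # None if the respondent or value is missing
        rows.append(row)
    return rows


# Toy tables with hypothetical variable names (not real NHANES names).
osteo = {1001: {"osteo_med": 1}, 1002: {"osteo_med": 2}, 1003: {"osteo_med": 1}}
demo = {1001: {"age": 67}, 1002: {"age": 54}, 1003: {"age": 71}}
lab = {1001: {"calcium": 9.4}}  # respondent 1003 has no lab record
smoking = {1001: {"smoker": "former"}, 1003: {"smoker": "never"}}
body = {1001: {"bmi": 24.1}, 1003: {"bmi": 29.8}}

analytic = build_analytic(
    osteo,
    keep=lambda r: r["osteo_med"] == 1,  # assumes 1 codes "yes"
    others=[(demo, ["age"]), (lab, ["calcium"]), (smoking, ["smoker"]), (body, ["bmi"])],
)
for row in analytic:
    print(row)
```

Listing the exact variables pulled from each table in `others` mirrors what the diagram records, so the two are easy to keep in sync.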

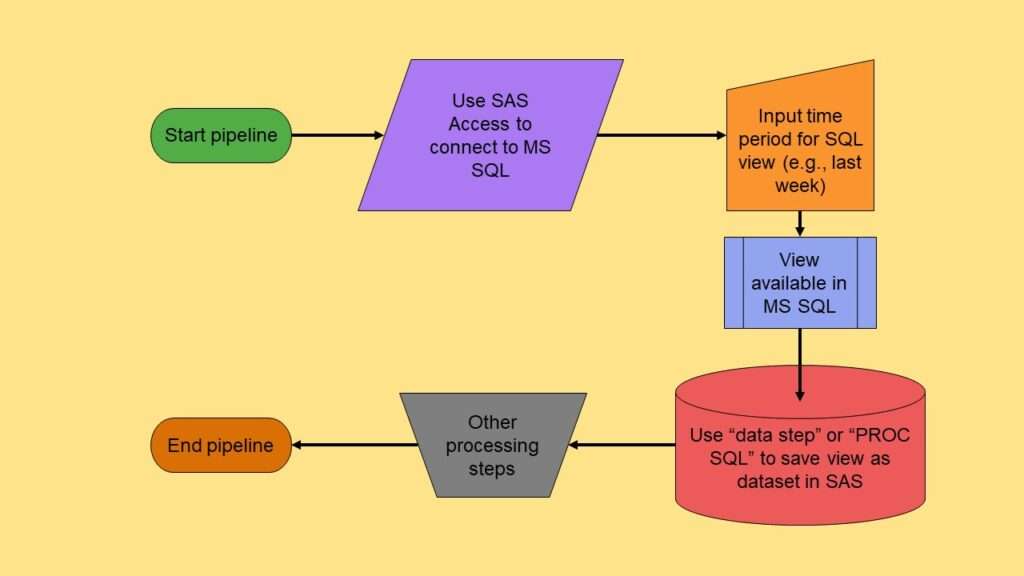

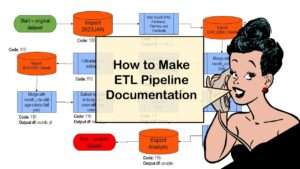

We queried the data quite a bit to figure out exactly which variables we needed from which datasets to patch together her analytic dataset. This graphic is a stylized version of the diagram we ended up making.

As you can see in the graphic, I use flowchart shapes and other shapes to indicate which datasets we used and the order in which we used them to build our analytic dataset. As the project wore on, I added the following features to the diagram:

- Exact variable names we were extracting from each dataset

- Number of records with valid values on important variables at each step

- Indicators as to which code files were involved with each step
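If you want the numbers on the diagram to come straight from your code rather than being copied by hand, you could log each build step as you go. This is a minimal sketch of that idea, not something from the original project: the `Step` record, the helper functions, and the file names are all hypothetical, and "valid" is assumed to mean "not missing (not `None`)".

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    source: str            # dataset the step draws from
    variables: list[str]   # exact variable names extracted
    code_file: str         # script that performs the step
    valid_counts: dict[str, int] = field(default_factory=dict)


def count_valid(rows: list[dict], variables: list[str]) -> dict[str, int]:
    """Count records with a non-missing (non-None) value for each variable."""
    return {v: sum(1 for r in rows if r.get(v) is not None) for v in variables}


def log_step(log: list[Step], rows: list[dict], source: str,
             variables: list[str], code_file: str) -> Step:
    """Record one build step, including valid-value counts at this point."""
    step = Step(source, variables, code_file, count_valid(rows, variables))
    log.append(step)
    return step


def render(log: list[Step]) -> str:
    """One line per step, suitable for pasting onto the diagram."""
    lines = []
    for i, s in enumerate(log, start=1):
        counts = ", ".join(f"{v}={n}" for v, n in s.valid_counts.items())
        lines.append(f"Step {i}: {s.source} [{s.code_file}] {counts}")
    return "\n".join(lines)


# Hypothetical rows and file names, for illustration only.
rows = [{"SEQN": 1001, "age": 67, "calcium": 9.4},
        {"SEQN": 1003, "age": 71, "calcium": None}]
log: list[Step] = []
log_step(log, rows, "Demographics", ["age"], "01_demographics.py")
log_step(log, rows, "Laboratory (calcium)", ["calcium"], "02_lab.py")
print(render(log))
```

This prints one line per step: `Step 1: Demographics [01_demographics.py] age=2` and `Step 2: Laboratory (calcium) [02_lab.py] calcium=1`. Each line carries the three features listed above: the variable names, the valid-record counts, and the code file.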

Because this was a thesis project, keeping good records was essential: the customer was going to defend the thesis and write a peer-reviewed article.

This example concerned piecing together data collected for research purposes into an analytic dataset. I will give another use case where we kept dataset source documentation because we had to piece together different sets of production data.

Dataset Source Documentation Use Case #2: Assembling a Hospital Analytic Dataset

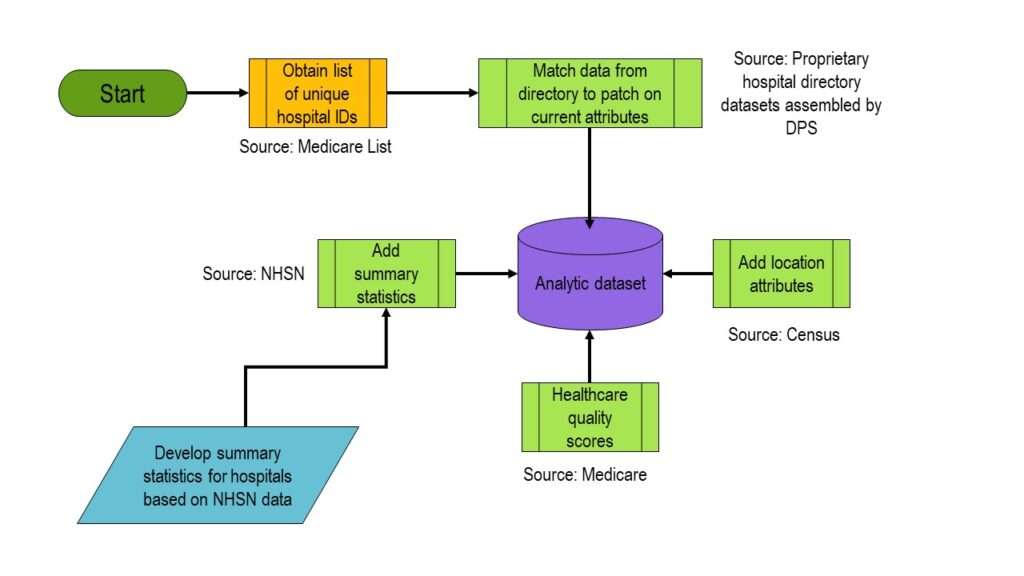

My colleague and I wrote a book chapter about open government data and dashboards in which we rebuilt a government dashboard to improve its utility. In it, we demonstrated how to construct an analytic dataset consisting of data about the hospitals we wanted to model.

To construct the dataset, which was to be a list of hospitals in Massachusetts and their attributes, we needed to start with a list with some sort of numeric identifier (because hospitals change names often due to buyouts). We found that Medicare assigns an identifier, so we got a list of all those unique identifiers. But then, we had to manually put together the data about each hospital we wanted to add. We literally had to look up the hospital web sites and abstract data from them.

We realized that we also had data from other datasets that we could patch into our hospital analytic dataset to supplement our manually collected data. Each hospital was located in several kinds of regions (e.g., metropolitan statistical areas (MSAs), counties), so we could use US Census data to calculate values of regional variables for each hospital and patch those on.
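Patching regional variables onto hospitals is a lookup from each hospital's region to that region's values. The sketch below is an illustration rather than the code behind the chapter. It assumes the regional variables have already been calculated from census data, one row per county, and every identifier, name, and number in it is a hypothetical placeholder. The `region_` prefix is a design choice that keeps the provenance of patched-on columns visible in the analytic dataset itself.

```python
# Hypothetical hospital records keyed by a Medicare identifier (placeholder IDs),
# holding fields abstracted manually from hospital websites.
hospitals = {
    "ID-A": {"name": "Hospital A", "beds": 120, "county": "County X"},
    "ID-B": {"name": "Hospital B", "beds": 300, "county": "County Y"},
    "ID-C": {"name": "Hospital C", "beds": 85, "county": "County X"},
}

# Hypothetical regional variables already computed from census data, one row per county.
county_census = {
    "County X": {"median_income": 61000, "pct_over_65": 17.2},
    "County Y": {"median_income": 74000, "pct_over_65": 14.9},
}


def attach_regional(hospitals: dict, regions: dict, region_key: str) -> dict:
    """Copy each hospital's regional values onto its record.

    Regional columns get a "region_" prefix so their provenance stays visible.
    Hospitals whose region is missing from `regions` get no regional columns.
    """
    enriched = {}
    for hid, record in hospitals.items():
        regional = regions.get(record[region_key], {})
        enriched[hid] = {**record, **{f"region_{k}": v for k, v in regional.items()}}
    return enriched


enriched = attach_regional(hospitals, county_census, region_key="county")
print(enriched["ID-C"])
```

Hospitals in the same county receive identical regional values, which is worth noting on the diagram because those columns vary by region, not by hospital.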

My colleague also found some healthcare quality scores that Medicare assigns to the different hospitals, so we added those. The National Healthcare Safety Network (NHSN) data are collected as part of a federal program; these data include other quality metrics we needed for our analysis. In fact, the NHSN has “too much information”: it has many correlated quality metrics that are hard to sift through. Since my colleague and I were making a dashboard of these data, we first reduced the NHSN data about each hospital to summary statistics before adding them to the analytic dataset.
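Reducing many metrics per hospital to a few summary statistics before joining keeps the analytic dataset at one row per hospital. The post does not say which summary statistics we computed, so the count, mean, and maximum below are assumptions chosen for illustration, and the metric names and values are hypothetical. The sketch assumes the NHSN-style data arrive in long format, one row per hospital per metric.

```python
from statistics import mean

# Hypothetical long-format rows: (hospital ID, metric name, value).
nhsn_rows = [
    ("ID-A", "metric_1", 0.8), ("ID-A", "metric_2", 1.2), ("ID-A", "metric_3", 1.0),
    ("ID-B", "metric_1", 1.5), ("ID-B", "metric_2", 0.9),
]


def summarize(rows):
    """Collapse many metrics per hospital into a few summary statistics."""
    by_hospital = {}
    for hid, _metric, value in rows:
        by_hospital.setdefault(hid, []).append(value)
    return {
        hid: {"nhsn_n": len(vals), "nhsn_mean": round(mean(vals), 3), "nhsn_max": max(vals)}
        for hid, vals in by_hospital.items()
    }


def add_summaries(hospitals, summaries):
    """Left-join the summaries; hospitals without NHSN rows get empty values."""
    empty = {"nhsn_n": 0, "nhsn_mean": None, "nhsn_max": None}
    return {hid: {**rec, **summaries.get(hid, empty)} for hid, rec in hospitals.items()}


hospitals = {"ID-A": {"name": "Hospital A"}, "ID-B": {"name": "Hospital B"},
             "ID-C": {"name": "Hospital C"}}
analytic = add_summaries(hospitals, summarize(nhsn_rows))
for hid, rec in analytic.items():
    print(hid, rec)
```

Keeping `nhsn_n` alongside the summaries records how many source metrics fed each value, which is the kind of detail that belongs on the dataset source diagram.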

You can read the book chapter to learn more about the front-end dashboard. If you are curious about how we assembled the analytic dataset, look at this graphic I made, which serves as dataset source documentation.

If you read my narrative and then look at the graphic, you can see why I made it. It depicts how I pieced the analytic dataset together: what data I used and how each source was incorporated into the analytic dataset. Keeping such documentation gives anyone evaluating the results of the analysis a quick visual understanding of the provenance of the underlying datasets.


Dataset source documentation is good to keep when you are doing an analysis with data from multiple datasets. Read my blog to learn how easy it is to throw together some quick dataset source documentation in PowerPoint so that you don’t forget what you did.