“AI on the edge” is not a term I was familiar with when I listened to the podcast, “A Conversation with Marc Staimer of Dragon Slayer Consulting,” where Marc was being interviewed by BrainChip Vice President Rob Telson. To be perfectly honest, I was most interested in the fact that Marc’s business was called “Dragon Slayer” Consulting – I was thinking that if he really wanted to do some wicked “AI on the edge” he really should team up with the DethWench!

But in all seriousness, by the end of the podcast, I was thinking maybe he should team up with me in real life. This is because this thing called “AI on the edge” is basically a huge data storage problem I didn’t know about until I heard the podcast. Let me explain the problem as I understand it from what Marc said, then provide what I believe could theoretically solve it.

AI on the Edge: What Does that Mean?

I’ll explain what I understand “AI on the edge” to be after listening to Marc explain it in the podcast. Imagine you have a high-tech object that is producing a lot of data that can be collected – like a Tesla car. The Tesla will have data coming from sensors (e.g., cooling system, speedometer, battery use), and also coming from video (e.g., back of car, driver’s view). You will undoubtedly have other data streams, but those are a few that come to mind. (Personally, I take public transportation and walk…)

The trick is that those data are all produced at the Tesla, and are stuck at the physical car until they are moved. I would have called that “local” data, but Marc calls it “data on the edge” – meaning that the data are stuck at the site where the data sources are.

Now let’s say you want to use those data in an artificial intelligence (AI) equation – like the airbag wants to know whether to deploy or not. Obviously, you need to take that “data on the edge” and do your “AI on the edge”. Marc’s point is that you really don’t have time to send the data back to some warehouse, grind them through a little extract-transform-load (ETL) protocol, serve them up to the AI engine, get the decision result from the AI engine (deploy or not deploy), and send that back to the Tesla’s airbag! It would all be over by then! (Which is why I take public transportation and walk…)

Solving the “AI on the Edge” Data Storage Problem

I could tell from listening to Marc that he was expecting people like me – data warehouse designers – to solve the data storage problem. In my opinion, he sounded a little exasperated by the problem. I admit, it sounded unsolvable to me when he was talking about it! Think of all those data on the Tesla. Even if you could store them all locally – er, “on the edge” – how could you do the minimum necessary ETL and feed them into an AI equation while you were having a car accident, and have it return a result in time for your airbag to intelligently deploy? I was starting to have more appreciation for the recent Tesla car accidents we hear about that people suspect are due to faulty AI deployment.

Theoretical Solution to the “AI on the Edge” Data Storage Problem

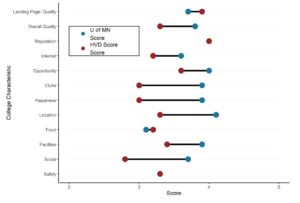

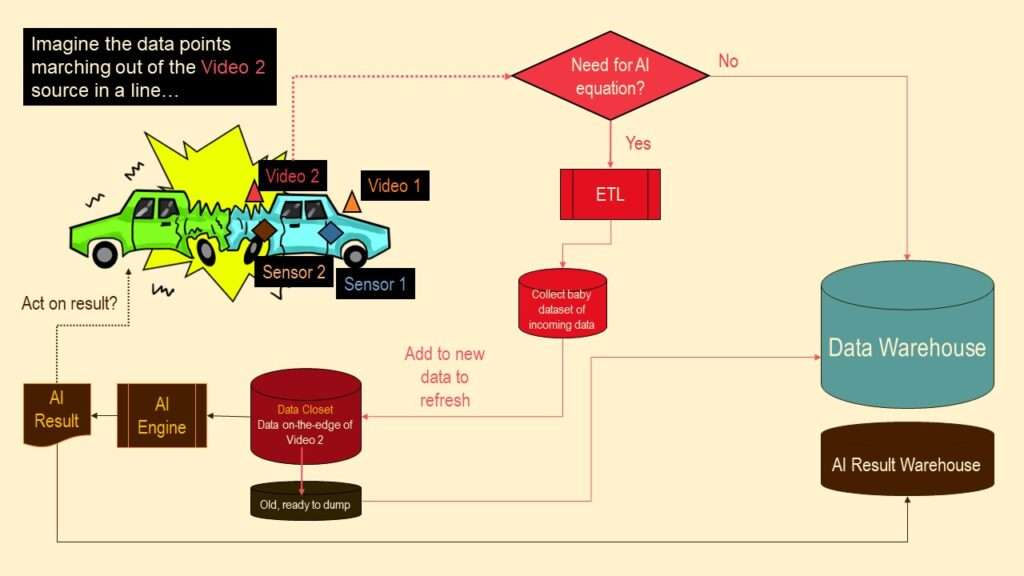

Well, here is my theoretical solution. Let me describe it in two diagrams. Here is the first one.

Let me explain this diagram for you. Here, we are having a car accident. The blue car on the right is the Tesla. I have labeled four data sources: two sensors in the car (Sensor 1 and Sensor 2), and two video feeds (Video 1 and Video 2).

In this diagram, we will concentrate on just one of those data sources – Video 2. Let us think about video data, and how it moves through the pipeline in packets. I made a dotted line because I want you to imagine each packet marching out of the Video 2 source one by one. The packet gets to a routing mechanism, which decides whether or not any data from Video 2 needs to be included in an AI equation. Basically, I assume all of the sources will have at least some data that is needed for AI on the edge, but each piece of data will need to be evaluated, because you only want to keep the data needed for the AI equation.

The data that are not needed for the equation can be lazily sent to a warehouse off site. But the data that are needed are copied off and go through the ETL required to reshape them for the “data closet” (I think this is what Marc meant when he said that term in the podcast). I think we are using the term “closet” because it is little, and we really don’t have much storage space at the physical car. (Although I wish he’d have used another word, because it is Pride month right now, and we really do not need our data to be in the closet!)
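To make the routing step a little more concrete, here is a minimal Python sketch of it. Everything named here is an assumption for illustration: the `Packet` shape, the `has_motion` rule for deciding what the AI equation needs, and the `to_row` transform standing in for the ETL. The sketch also assumes a packet goes either to the closet or to the warehouse queue, which is one reading of the diagram.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Packet:
    source: str    # which data source produced it, e.g. "Video 2"
    seq: int       # position of the packet in the stream
    payload: dict  # raw contents (this shape is an assumption)


def route(packet: Packet,
          is_needed: Callable[[Packet], bool],
          etl: Callable[[Packet], dict],
          closet: List[dict],
          warehouse_queue: List[Packet]) -> None:
    """Send needed packets through ETL into the closet; queue the rest."""
    if is_needed(packet):
        closet.append(etl(packet))      # reshaped for the AI equation
    else:
        warehouse_queue.append(packet)  # shipped off site later, lazily


# Hypothetical rule: only frames flagged with motion matter to the AI equation.
def has_motion(packet: Packet) -> bool:
    return bool(packet.payload.get("motion", False))


# Hypothetical ETL: keep only the fields the AI equation would read.
def to_row(packet: Packet) -> dict:
    return {"seq": packet.seq, "motion": 1}


closet: List[dict] = []
warehouse_queue: List[Packet] = []
for seq, motion in enumerate([False, True, True, False]):
    route(Packet("Video 2", seq, {"motion": motion}),
          has_motion, to_row, closet, warehouse_queue)
```

Passing the rule and the transform in as functions means each data source could have its own definition of “needed” without changing the router.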

I call this a “Monte Carlo data closet”. I guess I am creative. I call it that because I envision doing essentially Monte Carlo sampling on-the-fly. A baby dataset of incoming ETL data to feed the AI algorithm is added to the closet, while old data of the same size is stripped off of the closet and sent to the data warehouse. That way, we don’t run out of room in the closet. (Note: This is not my actual clothing closet, which has no room left!)
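Here is a rough Python sketch of the add-new, strip-off-old behavior described above. Note the assumption: this version is a plain fixed-size, first-in-first-out buffer, so it always evicts the oldest rows and does no random sampling. The class name `DataCloset`, its `capacity` parameter, and the `add_batch` method are all made up for illustration.

```python
from collections import deque
from typing import Iterable, List


class DataCloset:
    """A small fixed-size store: new rows in, oldest rows out."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.rows: deque = deque()

    def add_batch(self, batch: Iterable) -> List:
        """Add new rows and return the old rows evicted to make room."""
        self.rows.extend(batch)
        evicted = []
        while len(self.rows) > self.capacity:
            evicted.append(self.rows.popleft())  # oldest row leaves first
        return evicted


closet = DataCloset(capacity=4)
warehouse: List = []

warehouse.extend(closet.add_batch([1, 2, 3, 4]))  # closet is full, nothing evicted
warehouse.extend(closet.add_batch([5, 6]))        # two new rows push out two old ones
```

After the second call the closet holds rows 3 through 6 and rows 1 and 2 have moved to the warehouse, so the closet never grows past its capacity.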

Then, the new refreshed Monte Carlo dataset is sent from Video 2’s data closet to the AI engine (which is “on the edge”) for the equation. The result comes out – deploy airbag or don’t deploy airbag – and the result is sent back to the AI result warehouse for safekeeping. That way, we can go to the warehouse and recreate anything we need later, after the accident.
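As a sketch of the “safekeeping” idea, the snippet below runs a decision on the closet’s current contents and writes a record of the inputs and the result to a list standing in for the AI result warehouse. The function names, the record fields, and especially `placeholder_rule` are assumptions; the rule is a toy threshold and not a real airbag algorithm.

```python
import json
import time
from typing import Callable, List, Sequence


def decide_and_record(closet_rows: Sequence[float],
                      decide: Callable[[List[float]], str],
                      result_warehouse: List[str],
                      clock: Callable[[], float] = time.time) -> str:
    """Run the decision on the closet contents and log what happened."""
    inputs = list(closet_rows)  # snapshot, so later closet changes don't alter the record
    decision = decide(inputs)
    record = {"timestamp": clock(), "inputs": inputs, "decision": decision}
    result_warehouse.append(json.dumps(record))  # stored as text for later replay
    return decision


# Toy stand-in for the AI equation: deploy if any reading is above 0.8.
def placeholder_rule(rows: List[float]) -> str:
    return "deploy" if max(rows, default=0.0) > 0.8 else "do not deploy"


result_warehouse: List[str] = []
decision = decide_and_record([0.1, 0.9], placeholder_rule, result_warehouse)
```

Because each record keeps the exact inputs next to the decision, someone could later feed the same inputs back through the same rule and check that they get the same answer.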

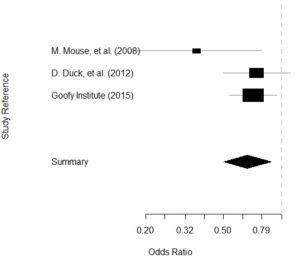

Envisioning my Solution to the “AI on the Edge” Data Storage Problem

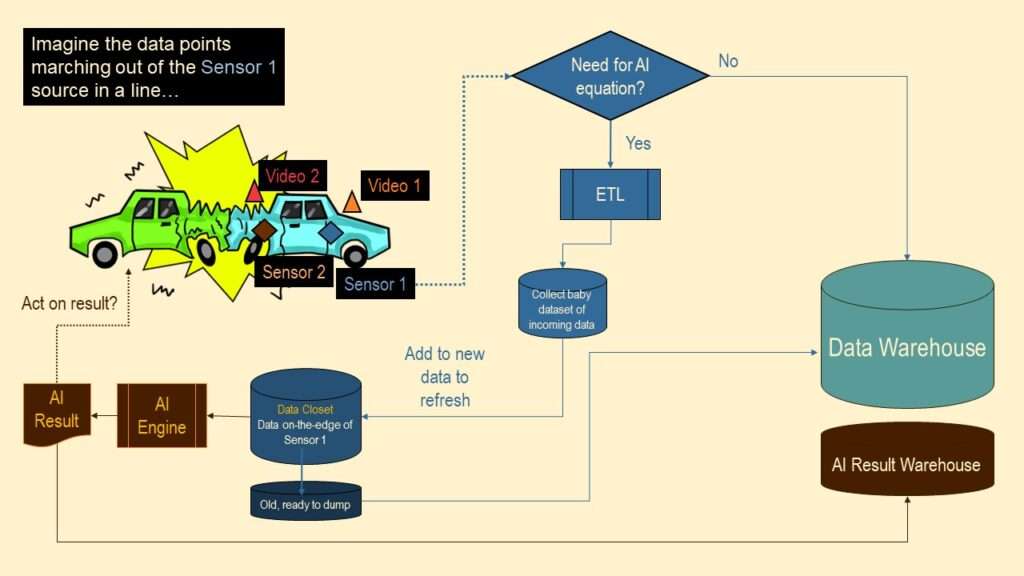

I wanted to make a second diagram for you to compare to the first diagram so you could see what is specific to the data source (Video 2) and what is used by all the data sources. In this one, instead of the data from Video 2, we follow the data from Sensor 1.

Sensor 1 has its own data closet that just holds the ETL-prepared data needed from Sensor 1 for the AI engine. But as you can see, the AI engine is using data from the data closets of all four data sources. This solves the issue that Marc was pointing out, which is getting the AI result locally and quickly enough that the decision can be used ASAP.
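A small Python sketch of an engine reading from every closet at once might look like the following. The closets are represented as plain lists keyed by source name, and `placeholder_engine` is a made-up voting rule (deploy when the latest reading from at least two sources is above a threshold). None of this comes from the podcast; it only illustrates the shape of the idea.

```python
from typing import Dict, List


def gather_inputs(closets: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Snapshot every source's closet into one input for the engine."""
    return {source: list(rows) for source, rows in closets.items()}


def latest_exceeds(rows: List[float], threshold: float) -> bool:
    """True if the closet is non-empty and its newest reading is above the threshold."""
    return bool(rows) and rows[-1] > threshold


# Toy stand-in for the AI engine: sources "vote" with their latest reading.
def placeholder_engine(inputs: Dict[str, List[float]],
                       threshold: float = 0.8,
                       min_sources: int = 2) -> str:
    votes = sum(latest_exceeds(rows, threshold) for rows in inputs.values())
    return "deploy" if votes >= min_sources else "do not deploy"


closets = {
    "Sensor 1": [0.2, 0.9],
    "Sensor 2": [0.85],
    "Video 1": [0.1],
    "Video 2": [],  # an empty closet simply casts no vote
}
decision = placeholder_engine(gather_inputs(closets))
```

Keeping one closet per source means each source can be refreshed on its own schedule, while the engine only ever reads small, already-prepared datasets.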

Marc was concerned about the AI engine having the data available and being able to chew through it locally fast enough so that a decision could be acted upon. I agree with Marc, but my concern is that any local solution won’t be replicable. This is solved in my design by the warehouses off site that store the results so they can be retrieved in the future.

Updated June 5, 2022. Added banner October 26, 2022. Revised banners July 10, 2023.

