R for logistic regression often does not come up as a topic in public health, especially when you are taking courses for your master’s degree. That’s because SAS is so good at logistic regression, professors and others often do not really think about teaching R for logistic regression.

Why R for Logistic Regression?

Even though SAS is good at it, there are many arguments for using R for logistic regression. First, R results are identical to SAS results out to many more decimals than most people report, so there are no issues with different results from different software. Second, it is much easier to load external datasets of most sizes into R GUI or RStudio interfaces compared to any interface in SAS (even SAS ODA). Third, once your dataset is in R, it is easier to utilize R’s functionality to create objects to do fancy things like graphing with the resulting model.

The main downside to R for logistic regression has to do with the fact that it is sort of a piecemeal operation. In public health, we care about odds ratios (ORs) and their 95% confidence intervals (CIs), which means we care about not having the resulting slopes from the logistic regression equation remain on the log scale. SAS’s PROC LOGISTIC lets you add the /risklimits option to do this, and it comes out on the output. But in R, this is a manual operation!

Using R for Logistic Regression: 6 Steps

Here are the steps I will go over.

- Prepare the analytic dataset you will use for the logistic regression equation. Designate an outcome variable, and make a list of candidate covariates you will consider including in the model based on model fit. We are assuming you will do multiple iterations as you fit the model; this tutorial just demonstrates presumably the first iteration.

- Create a glm object in R. This object contains the estimates resulting from the model (slope, y-intercept, etc.).

- Turn the object into a tidy object. This essentially converts the glm object to a dataframe – basically, a dataset in R that has the slopes, y-intercept, and the other metrics as actual editable and graphable data values.

- Edit the tidy object to add other data. This is where you basically run data editing commands on the tidy dataframe to add the OR and confidence limits as data values in the dataframe.

- Export the tidy dataframe. If you export it as a *.csv. you can open it in MS Excel, and graph the contents by hand, make tables of the data, import these results into another program, or anything you want!

- BONUS! Make a coefficient plot! I show you how to make a quick and dirty one that can help you develop an interpretation.

The dataset I am using to demonstrate this is from my LinkedIn Learning courses, but you can access it here on GitHub – download the dataset named BRFSS_i.rds (the last one in the folder). The data originated from the BRFSS.

Next, if you want the code I demonstrate below, go to this folder in GitHub and download the code titled 100_Logistic example.R.

Step 1: Prepare the Analytic Dataset and Modeling Plan

If you put the dataset in a folder, open R, and then point R to the directory with the dataset in it, you can run the following code.

BRFSS <- readRDS(file="BRFSS_i.rds") colnames(BRFSS) nrow(BRFSS)

This will create the dataset BRFSS in R, and show you the column names with the colnames command, and the number of rows with the nrows command. There are about 58,000 rows in the dataset and several different columns. We will just make up a hypothesis for this demonstration.

If you look at the results from the colnames command, you’ll see there is a variable called NOPLAN which is binary (1 or 0). This means that the respondent has no health insurance plan if it is a 1, and if it is 0, they have a plan. In the US, not having a plan means you pretty much do not have access to healthcare, so NOPLAN = 1 is a bad exposure. That’s what we will be modeling as our hypothesized exposure in our logistic regression model.

Our outcome variable is POORHLTH, which is an indicator variable for the respondent indicating that their health was “poor” on the general health question (e.g., how the respondent would rate their own health). If they said their health was “poor”, the variable has a value of 1, and if they said anything else, it is 0.

So the hypothesis is that after controlling for education level, having NOPLAN puts you at higher risk for having POORHLTH. For education level, you will notice there is a categorical variable called EDGROUP. This is a recode of native variable EDUCA. As you can see if you run a crosstabs (table command), LOWED is an indicator variable for the lowest education level (less than high school and high school graduates), and SOMECOLL is an indicator variable for having attended some college or vocational school without graduating. The highest level of education in EDUCA and EDGROUP then serves as the reference group in the model when these two indicator variables are used as covariates.

Now that we have our hypothesis and our analytic dataset (and presumably our modeling plan), let’s proceed to modeling.

Step 2: Create the Model

Like SAS, R has a “base”, and like with SAS components, you have to add R “packages” to get certain functionality. Luckily with R, the command for the general linear model (glm) is in the base. So, we will just call it with our code.

LogisticModel <- glm(POORHLTH ~ NOPLAN + LOWED + SOMECOLL, data = BRFSS, family = "binomial")

As you can see, this code sets up our glm command, specifies the model equation in the argument, specifies the dataset BRFSS as an option, and sets the family option to binomial” so we get a logistic model.

In the code above, we save the results of our model in an object named LogisticModel. If you just run the code to the right of the arrow, it will output results to the console in R GUI, but if you specify for it to save the results in a model like we do above, then you can run code on that model.

If you just run the glm code on the right side of the arrow, you will see that the output gives you the values of the intercept and slopes, and some model fit statistics (including the Akaike Information Criterion, or AIC). One reason why you want to create an object is that you can run the summary command on the object and get a lot more information out of the object, as we do here.

CODE:

summary(LogisticModel)

OUTPUT:

glm(formula = POORHLTH ~ NOPLAN + LOWED + SOMECOLL, family = "binomial",

data = BRFSS)

Deviance Residuals:

Min 1Q Median 3Q Max

-0.4417 -0.4351 -0.3550 -0.2732 2.5716

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -3.26924 0.03603 -90.726 <2e-16 ***

NOPLAN 0.03156 0.08470 0.373 0.709

LOWED 0.95962 0.04421 21.706 <2e-16 ***

SOMECOLL 0.53673 0.04789 11.208 <2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 26832 on 58130 degrees of freedom

Residual deviance: 26321 on 58127 degrees of freedom

AIC: 26329

Number of Fisher Scoring iterations: 6

Step 3: Turn Model into Tidy Object

Let’s observe two things about that output above. First, we see that our first covariate – NOPLAN – was not statistically significant. In real life, we’d probably have more variables than just education to introduce in the model, so you can imagine we would start iterating our models and trying to see if there was more variation not accounted for by the covariates we have. We have the AIC to help us. So we are off to a good start.

But second, we will want to document that we ran this model. We will want to keep track of what happens when you run this specific model as part of modeling for this project. So we will want to get the data that is displayed in R’s console out of R, and into MS Excel so we can make tables out of it, or otherwise have the raw values of the slopes to graph and consider in what I like to call “post-processing”.

This is where I like R better than SAS. It isn’t actually that hard to do this “model – calculate – export – import into Excel” pipeline in R. And the reason I added “calculate” to that pipeline is because if you are going to export the model statistics, why not add the ones you want before export it? Why not add the OR and the 95% limits, too? So that’s basically the big picture of what we are doing next.

In Step 3, we will just turn this model object into a tidy object so it will become an editable dataset called a dataframe. For that, we need to first install and load two packages – devtools and broom. Then we just run the tidy command on our LogicModel object. In the code, I save the resulting object under the name TidyModel.

library (devtools) library (broom) TidyModel <- tidy(LogisticModel)

If at this point, you just run the TidyModel object, you will see it looks in the R console a lot like normal SAS output from logistic regression, with the slope estimate, the standard error, the value of the test statistic, and the p-value. It’s in tabular format, so there are no headers and footers with model fit statistics and whatnot.

Step 4: Add Calculations to the Tidy Object

At this point, TidyModel is a dataframe in tibble format. The first row has the data about the y-intercept, and each other row has data about the slope on that row for that covariate. Since we have one intercept and three covariates, we have a total of four rows.

Now, all you statistics buffs will recognize what is going on here, in this next code!

TidyModel$OR <- exp(TidyModel$estimate) TidyModel$LL <- exp(TidyModel$estimate - (1.96 * TidyModel$std.error)) TidyModel$UL <- exp(TidyModel$estimate + (1.96 * TidyModel$std.error))

Well, although it’s probably obvious, I’ll explain what is going on in the code. In these three lines of code, I have added three variables to this dataset: OR, LL, and UL. In the TidyModel dataset that is created from the using the tidy command in Step 3, the column named estimate holds the value of the slope on the log odds scale. So, by running the exp command on that variable, I create the odds ratio for each slope, and store it in a variable called OR. Using the same approach and involving the slope’s standard error as stored in the std.error field of TidyModel, I create the 95% lower and upper limits for the confidence intervals, and store them in LL and UL respectively. Here is what the final dataset looks like.

# A tibble: 4 × 8

term estimate std.error statistic p.value OR LL UL

1 (Intercept) -3.27 0.0360 -90.7 0 0.0380 0.0354 0.0408

2 NOPLAN 0.0316 0.0847 0.373 7.09e- 1 1.03 0.874 1.22

3 LOWED 0.960 0.0442 21.7 1.82e-104 2.61 2.39 2.85

4 SOMECOLL 0.537 0.0479 11.2 3.72e- 29 1.71 1.56 1.88

Step 5: Export the Dataset so You Can Use it in Other Programs

This is the number one reason I love R for logistic regression – you can easily export the values from your analysis. This is something that is pretty hard to do in SAS, and it’s literally a breeze in R, as you will see by the code.

write.csv(TidyModel, "TidyModel.csv")

This line of code will convert the TidyModel from tibble to *.csv format, then export it as file Tibble.csv into the folder mapped to that R session (probably the one where you put the original BRFSS_i.rds dataset that you read in in the first step). If you go look there, find it, and open it, you will see it contains the same values as shown in the output above!

BONUS! Step 6: Coefficient Plot

Here’s another advantage you have if you use R for logistic regression: Graphing! If you have made it this far, you have both LogisticModel (object that is not tidy) and TidyModel (model that is tidy).

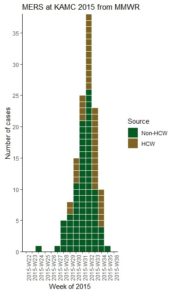

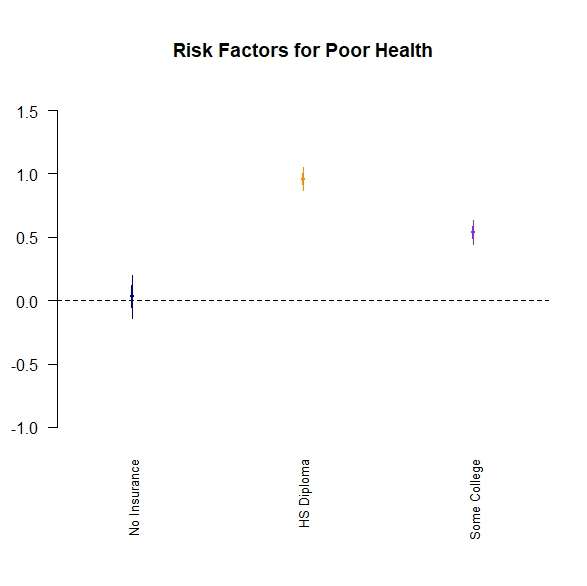

Let’s go back to LogisticModel. Let’s say you are writing about the results. It can be hard to look at a table of ORs and confidence limits to formulate your thinking, so I like to use this quick and dirty coefficient plot, which you can run on an object like LogisticModel.

Please be advised that this particular coefficient plot – from the arm package – does NOT use OR, LL, and UL in the plot. In fact, it only uses the log odds (original slope in the model), and automatically graphs two standard errors from the log odds. Nevertheless, I find it helpful to look at when trying to interpret the model, and write about it in my results sections.

Let’s look at the code.

library(arm)

VarLabels=c("Intercept", "No Insurance", "HS Diploma", "Some College")

par(mai=c(1.5,0,0,0))

coefplot(LogisticModel,

vertical=FALSE,

ylim=c(-1, 1.5),

main="Risk Factors for Poor Health",

varnames=VarLabels,

col=c("darkblue", "darkorange", "blueviolet", "darkgreen"))

First we call up the arm package. Then, I make a vector called VarLabels that has the labels of the rows – but please note that even though I include a label position for the intercept, it never shows up on the graph. The par command sets inner and outer margins for the plot that will output.

After that, you will see the coefplot command. It is run on the object LogisticModel, and by setting vertical to FALSE, we get a horizontal line of unity. We set the y limits to be logical for the log odds scale using the ylim option, and add a title using the main option. We also label our variables by setting varnames to the vector we made earlier called VarLabels, and we designate colors by setting col to the four colors spelled out in the character vector.

Here is the plot.

Updated August 18, 2023. Added livestream video September 6, 2023.

Read all of our data science blog posts!

R for logistic regression in health data analytics is a reasonable choice, if you know what packages to use. You don’t have to use SAS! My blog post provides you example R code and a tutorial!