FAERS data from the United States Food and Drug Administration (USFDA) are no better in quality than any other post-market surveillance adverse event data, and the FDA doesn’t pretend otherwise. FAERS stands for “FDA Adverse Event Reporting System”, and the Feds provide a public dashboard for all of us to use!

Even though these data are free on the web, FAERS data, like other community-reported medication adverse reaction data, include a lot of “suspect” reports (which I will define more clearly later). Nevertheless, I will make a solid case in this blog post that if you know your way around adverse event data and are willing to put in the time and patience needed to classify the data manually, you can uncover some interesting truths with even a descriptive analysis of the FAERS data. You just need to put on your thinking cap and get creative! A project like this would make a great addition to a data science portfolio.

FAERS Data Dashboard: Initial Approach

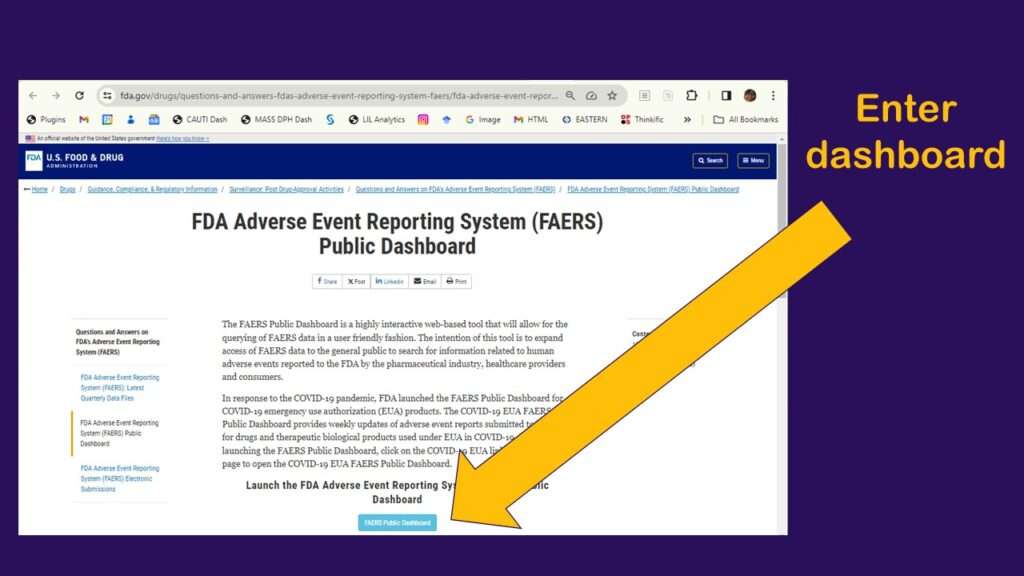

Let’s access the FAERS data in the simplest way – through the FAERS online dashboard.

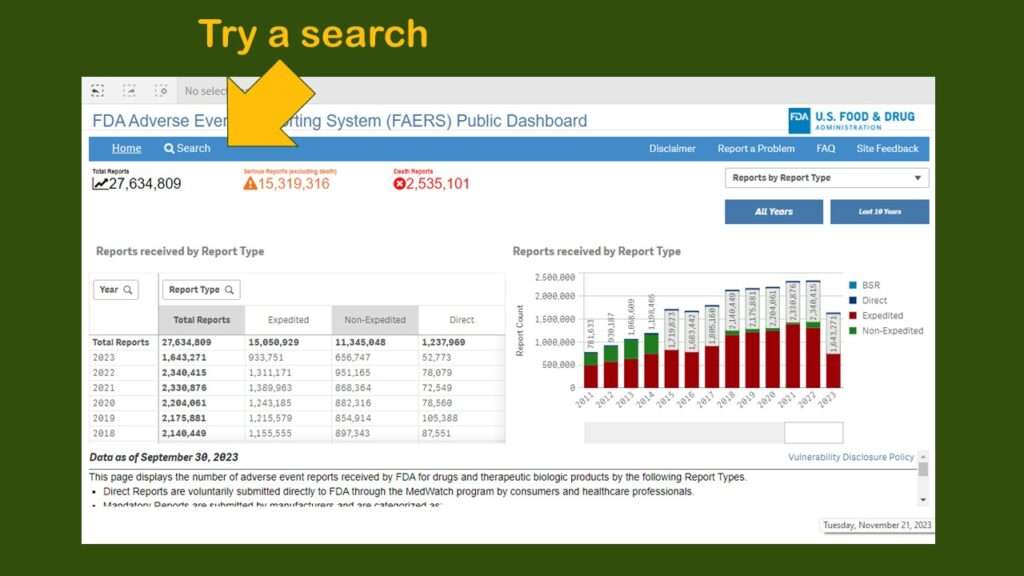

As shown in the graphic, you click to enter the dashboard and then agree to a disclaimer. After that, you arrive at a web page that looks like this.

As you can see, you are presented with a very busy dashboard and no obvious menu. I really did not know what to do next. I realize it is hard to design intuitive dashboards, but nearly everyone is going to look for a menu, some buttons, or something similar. Since I couldn’t find any, I just clicked on “search” to try a search.

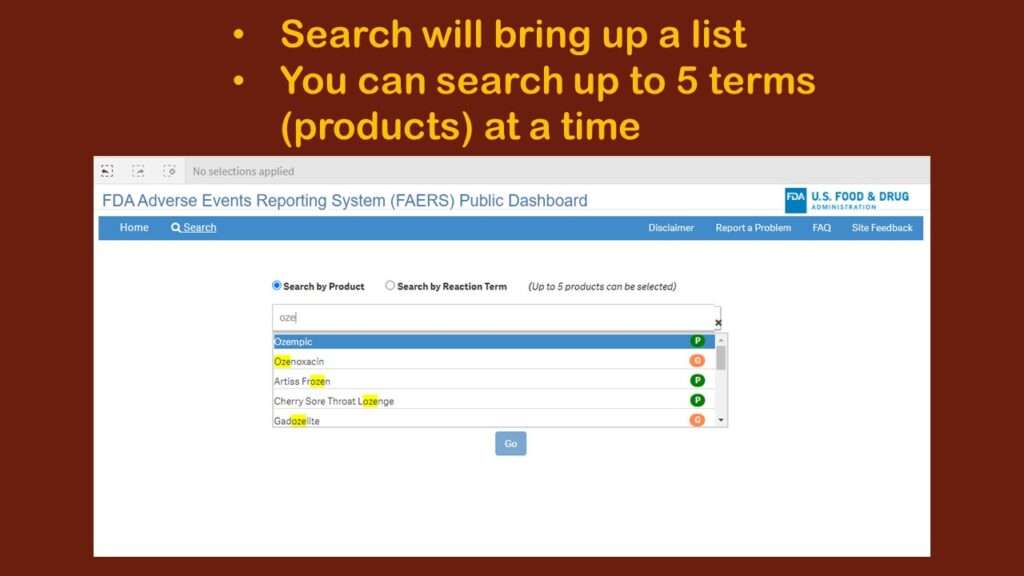

I was helping someone with an analysis of Ozempic, the trending diabetes/weight loss drug, so I looked to see what happened when I searched for that.

As you can see in the graphic, the dashboard ran a kind of “smart” search, matching any entry containing “oze”. I immediately realized that this works fine for a distinctive product name like Ozempic. But what if I wanted to study adverse events associated with lipid-lowering drugs? There are many more than five of them, but the search only lets you include five products.

Time Out for a Little Study Design Advice

Indeed, there are other ways to access FAERS data that might make it easier for the analyst to pick out all the lipid-lowering drugs. However, such a research question would not be easily answerable with this particular dashboard. So if you are just trying to do a portfolio project, you might want to select your topic carefully, based on what can reasonably be extrapolated from the data as they are served up in the dashboard (in a rather unclassified way).

It still needs to be scientifically relevant, but there are ways of using design to make your life easier. For example, you might find just three lipid-lowering drugs that are implicated, then compare the adverse event types between them. As I always seem to find myself recommending, do a quick search of Google Scholar and put your results into Zotero before you go too far with your study design.
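If you go the three-drug route, one way to organize the comparison in R (the language I use later in this post) is to stack the separate dashboard downloads into one dataframe with a column naming the drug, then cross-tabulate. This is a minimal sketch: the drug names, reactions, and counts below are hypothetical placeholders, not FAERS results, and it assumes each download has been trimmed to a Reaction column and a Cases column.

```r
# Hypothetical stand-ins for three separate dashboard downloads
# (placeholder drug names and made-up counts, not real FAERS data)
drug_a <- data.frame(Reaction = c("Myalgia", "Nausea"), Cases = c(120, 40))
drug_b <- data.frame(Reaction = c("Myalgia", "Fatigue"), Cases = c(90, 25))
drug_c <- data.frame(Reaction = c("Nausea", "Fatigue"), Cases = c(30, 15))

# Tag each download with its drug before stacking them
drug_a$Drug <- "Drug A"
drug_b$Drug <- "Drug B"
drug_c$Drug <- "Drug C"
all_drugs <- rbind(drug_a, drug_b, drug_c)

# Reaction-by-drug table of case counts (reactions a drug lacks get 0)
case_table <- xtabs(Cases ~ Reaction + Drug, data = all_drugs)
print(case_table)

# Column proportions: each reaction's share of a drug's total case count
print(round(prop.table(case_table, margin = 2), 2))
```

Comparing column proportions rather than raw counts keeps a heavily reported drug from dominating the comparison.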

Filtering and Downloading FAERS Dashboard Data

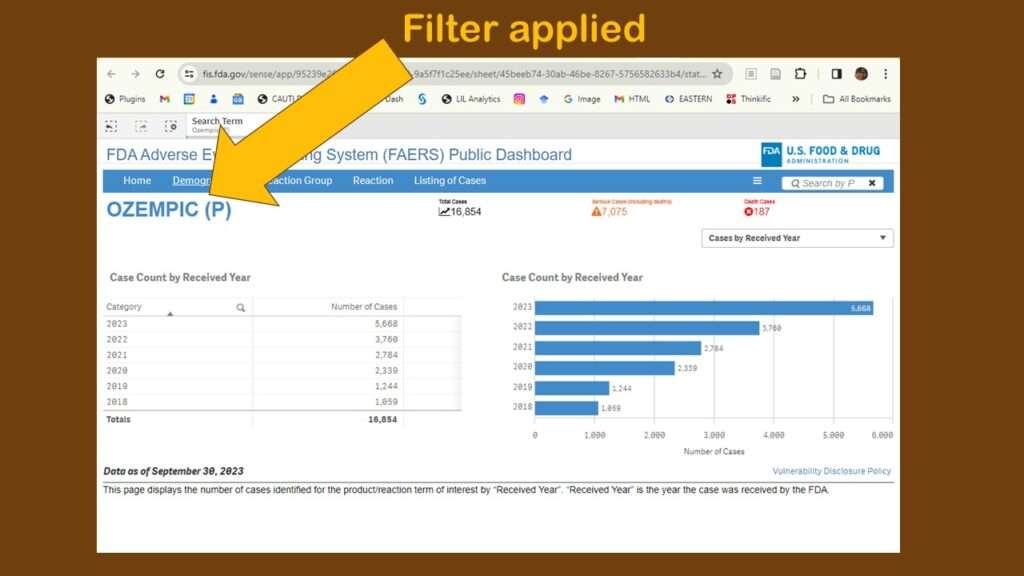

For this demonstration, I chose “Ozempic” from the dropdown in the search, and this brought me to this page (in the graphic). At first, I wasn’t sure anything had happened – but then I could see that a filter had been applied.

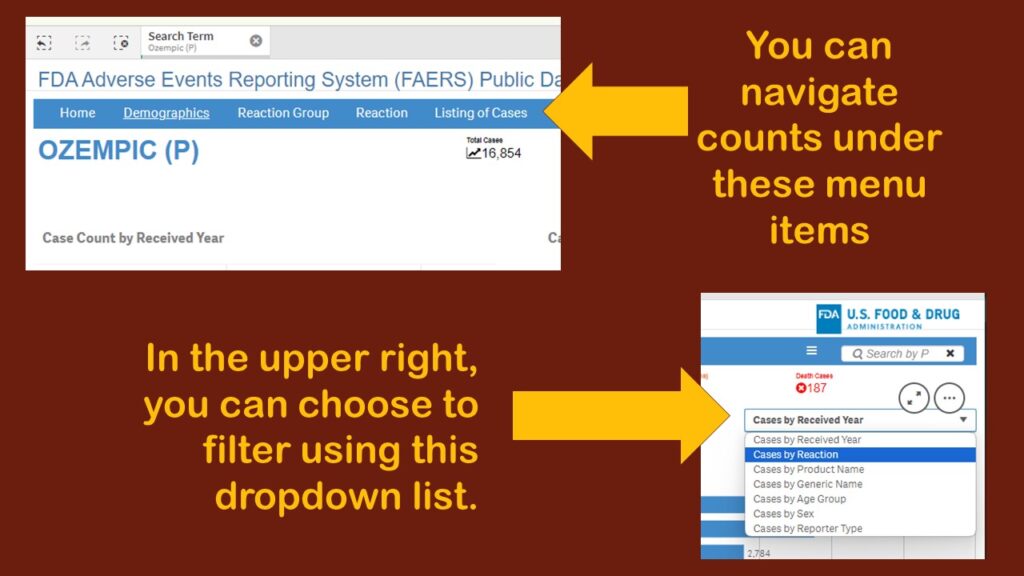

As you can see, the default display showed adverse event reports by year. Obviously, that’s not very interesting. Again, I was confused about menus. Where are they? And what do they do? I tried a few options on the blue horizontal menu bar (where “search” had been), and I found different types of counts. I kept having to leave the dashboard and retrace my steps to figure out what had happened. It was very frustrating.

The third or fourth time I retraced my steps to get to this page, I finally noticed a dropdown in the upper right corner. As you can see in the graphic, there are several choices; I chose “Cases by Reaction”, thinking that would be the obvious thing to examine in this database.

This produced a display more like what I had been expecting to see – where we have the frequencies by reaction for Ozempic.

Issues with Downloaded Data Structure and Format

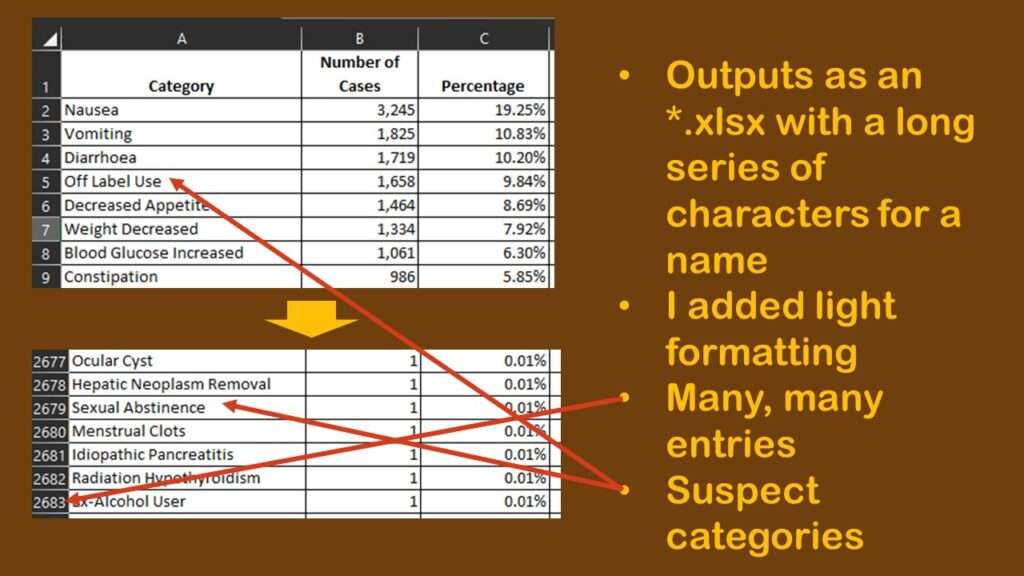

I was intrigued by the dataset that I downloaded. It was in *.xlsx format, and its file name was a long string of random characters. I opened it, lightly formatted it so it was easier to read, and made a graphic to describe my observations.

As shown in the graphic, to my chagrin, there were many rows: 2,682, to be exact. As a reminder, each row is a unique type of adverse reaction reported for Ozempic during the time period covered by the data. Each row has a “number of cases” count, so every row has at least one case in it. So just by looking at the spreadsheet, we know we have 2,682 types of reactions to consider for Ozempic. What we also want to know is the total number of cases and how those cases are distributed across the reaction types.
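Once the spreadsheet is in R, a few base R calls describe how the cases are spread across reaction types. The sketch below uses a small hypothetical table in place of the real 2,682-row download; with the real file you would use the imported dataframe’s Cases column instead (Cases is the column name used later in this post).

```r
# Hypothetical miniature version of the "Cases by Reaction" download
ae <- data.frame(
  Reaction = c("Nausea", "Vomiting", "Diarrhea", "Off label use",
               "Headache", "Dizziness", "Rash", "Sexual abstinence"),
  Cases    = c(300, 180, 150, 60, 35, 12, 2, 1)
)

n_types     <- nrow(ae)       # number of distinct reaction types
total_cases <- sum(ae$Cases)  # total case count across all rows

# Min, quartiles, median, mean, and max of cases per reaction type
print(summary(ae$Cases))

# How many reaction types have 40 or more cases, and what share of
# all cases those rows account for
big_rows  <- ae$Cases >= 40
n_big     <- sum(big_rows)
share_big <- sum(ae$Cases[big_rows]) / total_cases
cat(n_big, "of", n_types, "reaction types have 40+ cases, covering",
    round(100 * share_big, 1), "% of cases\n")
```

A long tail of rare reactions (a median far below the mean) is exactly the pattern that makes row-by-row classification so laborious.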

However, that is going to be hard to figure out from the data, as you can see in the graphic. Nausea, the most prevalent adverse event in the dataset with 3,245 cases, makes up 19.25% of the reports. The second and third most prevalent adverse reactions are vomiting and diarrhea, which, like nausea, are also common adverse reactions to other medications.

But then we see that the fourth most common so-called “adverse event” is “off-label use”. Off-label use may have led to an adverse event, but using Ozempic off-label is not in itself an adverse event. Also, some of the entries are pretty suspect. How can “sexual abstinence” be caused by Ozempic?

Getting Creative with FAERS Data

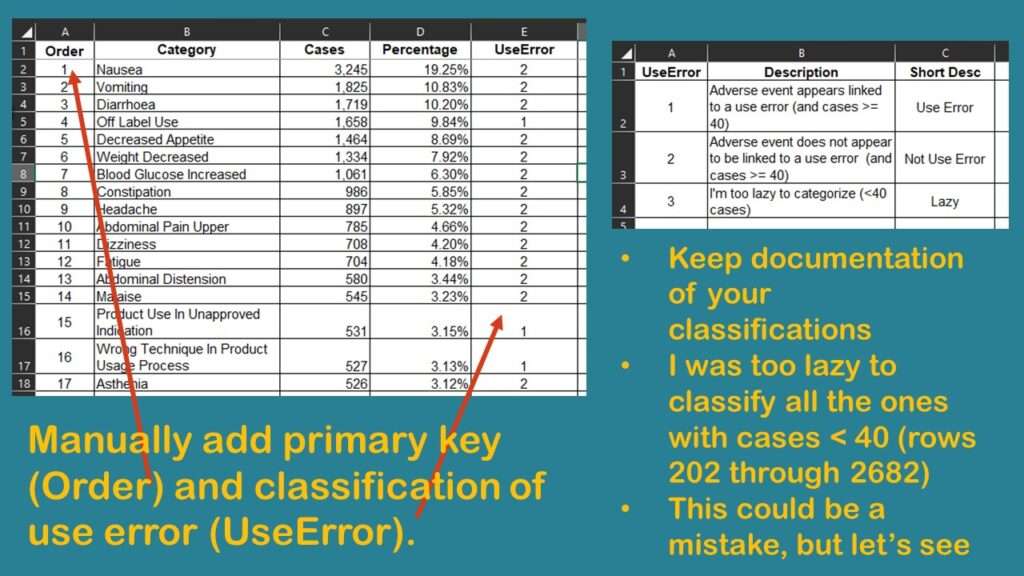

I study healthcare quality, and I wondered whether I could classify these rows by whether or not there was a “use error”. I saved the dataset as “Download from web page_edited.xlsx” and started editing it. First, I manually added a row identifier (primary key) called Order and populated it with a sequence of numbers. That way, I could always restore the order the rows were in when I downloaded them.
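If you would rather add the Order key in R than in Excel, seq_len() does the same job, and sorting on Order puts the rows back in download order after any re-sorting. A minimal sketch on hypothetical rows:

```r
# Hypothetical rows in the order they arrived from the dashboard
ae <- data.frame(Reaction = c("Nausea", "Vomiting", "Off label use"),
                 Cases    = c(300, 180, 60))

# Primary key: 1, 2, 3, ... in download order
ae$Order <- seq_len(nrow(ae))

# Re-sort alphabetically, e.g. to make manual coding easier...
ae_sorted <- ae[order(ae$Reaction), ]

# ...then restore the original download order using the key
ae_restored <- ae_sorted[order(ae_sorted$Order), ]
rownames(ae_restored) <- NULL
print(ae_restored)
```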

Next, I added a column called UseError. For values, I used 1 to represent some sort of use error and 2 to represent some other cause of an adverse event (later, I also used 3 to mark rows I left unclassified). Use errors could include off-label use as well as other healthcare-quality-related errors, such as dosing errors or incorrect administration.
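Because the codes are just numbers, it can help to attach readable labels to them in R, which also exposes any stray values introduced during manual coding. This sketch assumes the three codes used in this post (1 = use error, 2 = other cause, 3 = left unclassified); the rows themselves are hypothetical.

```r
# Hypothetical coded rows (1 = use error, 2 = other cause, 3 = unclassified)
ae <- data.frame(
  Reaction = c("Nausea", "Off label use", "Incorrect dose administered",
               "Vomiting", "Rash"),
  Cases    = c(300, 60, 45, 180, 2),
  UseError = c(2, 1, 1, 2, 3)
)

# Attach labels; any code other than 1, 2, or 3 (or a blank) becomes NA
ae$UseErrorLabel <- factor(ae$UseError, levels = c(1, 2, 3),
                           labels = c("Use error", "Other cause",
                                      "Unclassified"))

# Stop if the manual coding produced any invalid codes
stopifnot(!anyNA(ae$UseErrorLabel))

# Number of rows (not cases) in each category
print(table(ae$UseErrorLabel))
```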

As shown in the graphic, I found that the only way to code UseError correctly was to carefully read and manually code each row. Of course, I had to keep careful documentation of my coding approach as I went.

Eventually I got down to rows for adverse events with fewer than 40 cases each. That was nearly 2,500 rows (from row 202 to row 2,682). As the graphic shows, I was too lazy to code all those rows. I wondered whether it mattered that I left them out, so I decided to do a quick analysis to see if I was on the right track.
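Whether skipping the small rows matters depends on how much of the total case count lives in them. One quick way to see that in R, sketched here on made-up counts (not real FAERS values), is to sort the rows by Cases and track the cumulative share of cases covered as you classify from the top down.

```r
# Made-up case counts, one per reaction type (not real FAERS values)
cases <- c(300, 180, 150, 60, 45, 35, 20, 12, 8, 5, 3, 2, 1, 1, 1)

sorted   <- sort(cases, decreasing = TRUE)
coverage <- cumsum(sorted) / sum(sorted)  # share covered by the top k rows

# Share of all cases covered if only rows with 40+ cases are classified
share_40plus <- sum(cases[cases >= 40]) / sum(cases)

# Smallest number of rows that must be classified to cover 90% of cases
rows_for_90 <- which(coverage >= 0.90)[1]

cat("Rows with 40+ cases cover", round(100 * share_40plus, 1), "% of cases\n")
cat("Rows needed to reach 90% coverage:", rows_for_90, "\n")
```

Plotting coverage against k would show where classification effort stops paying off; a row-count cutoff like 40 is only a proxy for that.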

Quick Analysis: How Important is it to Classify Every Single Row?

I decided to use R for this exercise, and you can download my code from GitHub here.

First, to read in the edited Excel file, I used the readxl package in R. I set the working directory and then imported the file using the read_excel() function from the package. This function returns a tibble, a data format in R that I do not like to use, so I wrapped the call in data.frame() to get a regular R dataframe. I named this dataframe ae_a. I also ran nrow() on it to check the number of rows.

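Code:

```r
library(readxl)  # provides read_excel()

# Run after setting the working directory to the folder holding the file
ae_a <- data.frame(read_excel("Download from web page_edited.xlsx"))
nrow(ae_a)
```

Output:

```
[1] 2682
```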

This checks out as exactly the number of rows we’d expect, so we feel pretty good about the import operation.
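Beyond the row count, a few stopifnot() checks right after import can catch problems in the edited spreadsheet before any sums are taken. This is a sketch: it assumes the column names Order, Cases, and UseError described above, and it uses a small hypothetical dataframe in place of ae_a so it runs without the Excel file.

```r
# Hypothetical stand-in for ae_a (same column names as the edited file)
ae_check <- data.frame(
  Order    = 1:4,
  Reaction = c("Nausea", "Vomiting", "Off label use", "Rash"),
  Cases    = c(300, 180, 60, 2),
  UseError = c(2, 2, 1, 3)
)

# stopifnot() halts at the first condition that is not TRUE
stopifnot(
  all(c("Order", "Cases", "UseError") %in% names(ae_check)),  # columns present
  is.numeric(ae_check$Cases),           # Cases imported as numbers, not text
  !anyNA(ae_check$Cases),               # no blank case counts
  all(ae_check$Cases >= 1),             # every row has at least one case
  !anyDuplicated(ae_check$Order),       # Order works as a primary key
  all(ae_check$UseError %in% c(1, 2, 3))  # only the three valid codes
)
```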

We know the total number of rows, which represents all the different types of adverse events reported, but we do not know the total number of cases represented by these rows. I was curious about this, so I calculated the variable total_cases by summing the entire Cases column of the dataframe.

```r
total_cases <- sum(ae_a$Cases)
```

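The value of total_cases was 53,149. Strictly speaking, that is not the number of people who reported an Ozempic adverse event. A single FAERS report can list several reactions, and the numbers suggest each report is counted once in every reaction row it lists: 3,245 nausea cases making up 19.25% of reports implies roughly 16,900 distinct reports, far fewer than 53,149. So total_cases is best read as a count of case-reaction pairs. But what worried me was that, in my laziness, I was not classifying enough of the cases represented in the dataset.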

To further examine the consequences of my choice to be lazy, I calculated three “numerators” that I could use with total_cases as the denominator. The first was total_useerrors. The value of this variable is the sum of the Cases column where UseError is set to 1 (as the earlier graphic documented).

```r
total_useerrors <- sum(ae_a$Cases[ae_a$UseError == 1])
```

Next, I will calculate total_unclass, which is the sum of the Cases where UseError is set to 3 – these are the ones I did not categorize because I was lazy.

```r
total_unclass <- sum(ae_a$Cases[ae_a$UseError == 3])
```

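Finally, I will calculate total_nonuseerrors by summing the Cases where UseError is set to 2, meaning adverse events with 40 or more cases that were not related to use error.

```r
total_nonuseerrors <- sum(ae_a$Cases[ae_a$UseError == 2])
```

Summing the code-2 rows directly, rather than subtracting the other two totals from total_cases, is what makes the following check meaningful: a value computed by subtraction would add up to total_cases by construction, even if some rows carried a mistyped code such as 4.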
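As a cross-check on the three separate sums, base R’s tapply() can total Cases for every UseError code in one call. This sketch uses hypothetical rows; on the real data you would pass ae_a$Cases and ae_a$UseError instead.

```r
# Hypothetical coded rows (same column meanings as ae_a)
ae <- data.frame(Cases    = c(300, 180, 60, 45, 35, 2),
                 UseError = c(2,   2,   1,  1,  3,  3))

# Sum of Cases within each code: 1 = use error, 2 = other, 3 = unclassified
by_code <- tapply(ae$Cases, ae$UseError, sum)
print(by_code)

# The per-code totals should add back up to the overall total
stopifnot(sum(by_code) == sum(ae$Cases))
```

A stray code would show up here as an extra, unexpected entry in by_code.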

To check my work, I used the identical() function to compare the denominator, total_cases, with the three numerators added together.

Code:

```r
identical(total_cases, total_nonuseerrors + total_useerrors + total_unclass)
```

Output:

```
[1] TRUE
```

Because identical() returned TRUE, the two arguments are exactly the same (here, equal numbers), so every case falls into exactly one of the three categories. Now, using these values, we can examine the proportion of cases that remained unclassified because I was too lazy to classify them.
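One caution about identical(): it compares storage type as well as value, so an integer and a double holding the same number are not identical. If one side of the check had been stored as an integer (for example, after an as.integer() conversion), identical() would return FALSE even though the totals match, whereas == and all.equal() compare values only. A quick illustration:

```r
a <- 53149L  # integer
b <- 53149   # double (numeric)

identical(a, b)          # FALSE: same value, different storage type
a == b                   # TRUE: compares values only
isTRUE(all.equal(a, b))  # TRUE: value comparison with a small tolerance
```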

I want to use ggplot2 to make a pie chart like I do in this blog post. So to start out, I need to make a dataframe to feed the plot. I will use a trick I love to assemble the dataframe in R. I want the dataframe to have two variables: a description of the levels (Use Errors, Other Errors, and Unclassified) and the proportion of cases in each level. So I will make a vector for each of these and then combine them with data.frame().

```r
Type <- c("Use Errors", "Other Errors", "Unclassified")

Proportion <- c(total_useerrors/total_cases,
                total_nonuseerrors/total_cases,
                total_unclass/total_cases)

plot_df <- data.frame(Type, Proportion)
```

As you can see, I made a dataframe called plot_df by joining the vector named Type with the vector named Proportion. Type is a character vector describing the three levels, while Proportion is a numeric vector holding the three proportions calculated from the variables I made. I made sure to list the items in both vectors in the same order so that each label would line up with the right proportion when I combined the two vectors into a dataframe.
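An alternative that removes the need to keep two vectors in the same order is to start from a named vector, so each label travels with its value. A sketch, using hypothetical totals in place of the real ones:

```r
# Hypothetical totals; the names stay attached to their values
totals <- c("Use Errors"   = 100,
            "Other Errors" = 700,
            "Unclassified" = 200)

plot_df <- data.frame(Type       = names(totals),
                      Proportion = unname(totals) / sum(totals))
print(plot_df)
```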

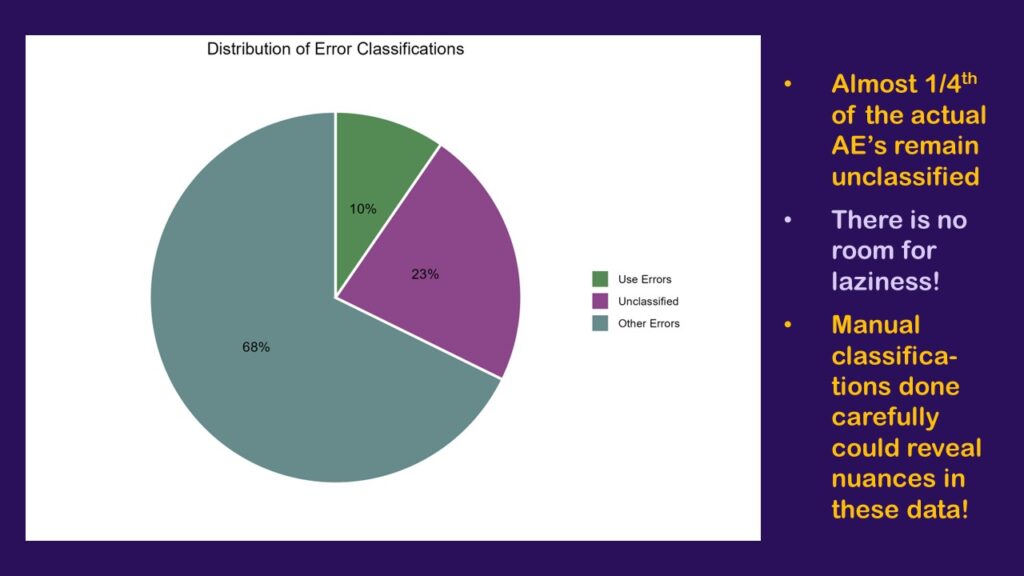

```
> plot_df
          Type Proportion
1   Use Errors 0.09593784
2 Other Errors 0.67741632
3 Unclassified 0.22664584
```

Before making the plot, I decided to pick out three colors to use for the pie chart. I put them in a vector called pie_colors.

```r
pie_colors <- c("paleturquoise4", "orchid4", "palegreen4")
```

Finally, it was time to load the ggplot2 library and write the code to create the pie chart, using pie_colors to color the slices.

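```r
library(ggplot2)

pie <- ggplot(plot_df, aes("", Proportion, fill = Type)) +
  # One stacked bar with white borders between the slices
  # (linewidth replaces the deprecated size argument in ggplot2 >= 3.4.0)
  geom_bar(width = 1, linewidth = 1, color = "white",
           stat = "identity") +
  # Wrap the bar around the y axis to turn it into a pie
  coord_polar("y") +
  # Percentage labels centered in each slice
  geom_text(aes(label = paste0(round(Proportion*100, 0), "%")),
            position = position_stack(vjust = 0.5)) +
  labs(x = NULL, y = NULL, fill = NULL,
       title = "Distribution of Error Classifications") +
  guides(fill = guide_legend(reverse = TRUE)) +
  # Colors are assigned in factor-level (alphabetical) order of Type
  scale_fill_manual(values = pie_colors) +
  theme_classic() +
  theme(axis.line = element_blank(),
        axis.text = element_blank(),
        axis.ticks = element_blank(),
        plot.title = element_text(hjust = 0.5,
                                  color = "black"))
```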

Also, I added a ggsave() call to export the final figure as a *.png file called “pie.png”.

```r
ggsave(filename = "pie.png",
       plot = pie,
       units = "in",
       width = 8,
       height = 5.5,
       dpi = 300)
```

FAERS Data Analysis: Don’t be Lazy…

The results of my little exploratory analysis provide strong evidence that it is a bad idea to get lazy with the FAERS data.

As can be seen here, by only bothering to classify the rows that contained 40 or more cases, I was able to classify only about 77% of the cases in the dataset, a little over three-fourths. The orchid-colored pie slice (or should I say orchid4-colored?) represents all the cases from rows I was too lazy to classify. That’s 23% of the cases.
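The slice labels are rounded one at a time, so adding rounded labels together can drift by a point: 10% + 68% suggests 78% classified, but the unrounded proportions printed in plot_df earlier give about 77%. A quick check in R:

```r
# Unrounded proportions from the plot_df output shown earlier
p <- c("Use Errors"   = 0.09593784,
       "Other Errors" = 0.67741632,
       "Unclassified" = 0.22664584)

labels_pct <- round(p * 100)  # what the pie-slice labels display
sum(labels_pct)               # 101: rounded labels need not total 100

# Share of cases actually classified (codes 1 and 2), rounded once at the end
classified <- unname(p["Use Errors"] + p["Other Errors"])
round(100 * classified)       # 77
```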

That’s no good. If we were really going to do this analysis and have it mean anything, I would have to go through and classify many more of the rows, if not all of them. Otherwise, our interpretation is greatly limited. On one hand, we know that the largest percentage that could be use errors is the roughly 10% we classified plus the 23% that are unclassified, which comes to about 32% using the unrounded proportions: nearly a third!

…Be Creative!

On the other hand, if the entire orchid slice turned out to contain no use errors at all, the smallest percentage of all cases that the use errors could represent is about 10%, which already seems high. If the floor is about 10% and the ceiling is about 32%, that’s bad! That’s too high!
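The floor-and-ceiling reasoning amounts to two sums over the same unrounded proportions from plot_df: the floor assumes none of the unclassified cases are use errors, and the ceiling assumes all of them are.

```r
p_use     <- 0.09593784  # share of cases classified as use errors (code 1)
p_unclass <- 0.22664584  # share of cases left unclassified (code 3)

floor_pct   <- 100 * p_use                # no unclassified case is a use error
ceiling_pct <- 100 * (p_use + p_unclass)  # every unclassified case is one

round(c(floor = floor_pct, ceiling = ceiling_pct), 1)  # 9.6 and 32.3
```

Classifying more of the small rows moves cases out of p_unclass, which narrows the gap between the two bounds.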

Of course, to do justice to this project would require much more work. I’d need to make sure whatever classification I did made sense as a “use error”. I might need to actually talk to a pharmacist and make sure I’m doing it right. Also, it’s important to look in the scientific literature to get a better idea of the issues around any adverse event topic you might be studying.

As this demonstration using FAERS data exported from the public online dashboard shows, you can do a relatively meaningful descriptive analysis with these data, even though post-market surveillance data are notoriously messy. You just need to plan a strong descriptive study design, do some manual work on the data, and aim to build upon the scientific literature when you report your results.

Published November 23, 2023. Added video January 19, 2024. Added another video January 30, 2024.

