End-to-end AI pipelines are not something most academics learn about while studying for a master’s or doctoral degree, especially in public health. However, almost everyone with a doctoral degree had to study statistics, and most graduate students use statistics in their theses or dissertations. So academics tend to be well-versed in statistics, and people with public health degrees are specifically well-versed in biostatistics. Because statistics is an important component of an end-to-end AI pipeline, academics can make critical contributions to one.

End-to-end AI pipelines, however, involve artificial intelligence (AI) algorithms, which are mathematical constructs that are not routinely developed as part of a thesis or dissertation. This page from Intel provides some diagrams of various end-to-end AI pipelines, and you can see in these diagrams the part where the AI algorithm comes in. One could see these AI algorithms as higher-level statistics, or computational statistics; perhaps a better way is to see them as higher-level mathematical equations. However you see AI algorithms, you are probably not going to be very good at making them if you don’t have a good command of both statistics and math. Some academics deliberately study AI development in graduate school, but it is not widely studied, especially in public health.

This is why I was particularly intrigued by this commentary – rant? – I watched on YouTube from a person who apparently builds end-to-end AI pipelines and who was clearly frustrated with the academics who need to be called upon for statistical know-how as part of the process.

Here is a quote from the video that I think sums up what he is trying to say:

In the real world, we don’t need any more pontificating statisticians. We need programmers with serious data skills that can understand and work the end-to-end machine learning pipeline.

End-to-End AI Pipeline: What Academics Are Missing

If you look at some of those diagrams on the Intel page, you will see that the basic problem being solved goes like this:

1. We want to make a decision about something (e.g., what choice of videos to display on YouTube according to user preference)

2. We import relevant live data from the production environment into a work area

3. We transform the data in the work area so we can run the AI algorithm on it

4. We run the algorithm, and we get the decision (e.g., here are the videos to display)

5. We return the decision to the production environment where it can be acted upon.
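To make the flow above concrete, here is a minimal Python sketch of the five steps. It is only an illustration under my own assumptions: the function names (`run_pipeline`, `fetch_watch_log`, `count_topics`, `top_two`) and the toy watch log are hypothetical, and a real pipeline would read from and write to an actual production system.

```python
from collections import Counter
from typing import Any, Callable, List


def run_pipeline(
    fetch: Callable[[], Any],
    transform: Callable[[Any], Any],
    decide: Callable[[Any], Any],
    publish: Callable[[Any], None],
) -> Any:
    """Run steps 2 through 5; step 1 is the question the pipeline answers."""
    raw = fetch()                # step 2: import data from production
    prepared = transform(raw)    # step 3: reshape it for the algorithm
    decision = decide(prepared)  # step 4: run the algorithm
    publish(decision)            # step 5: return the decision to production
    return decision


# Toy stand-ins (hypothetical) for a "which topics to show" decision.
def fetch_watch_log() -> List[str]:
    return ["cats", "cooking", "cats", "news", "cats", "cooking"]


def count_topics(log: List[str]) -> Counter:
    return Counter(log)


def top_two(counts: Counter) -> List[str]:
    return [topic for topic, _ in counts.most_common(2)]


published: List[List[str]] = []  # stands in for the production environment
result = run_pipeline(fetch_watch_log, count_topics, top_two, published.append)
```

Notice that only `top_two` is "the algorithm." Everything else in the sketch is about getting data out of, and decisions back into, the production environment.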

The person in the video is basically complaining that academics seem to only be able to work on steps 2 through 4. They are actually necessary to develop step 4, so you can’t get rid of them. They are best at step 4, and not that good at steps 2 and 3, but can do them. In my opinion, most academics could not easily figure out what happens before we get to step 2, nor do they have a very good command of what happens after we complete step 4 and move forward.

The person in the video bemoans that academics have problems with “real world” data, but I don’t think that’s the issue. What I think academics are really missing, when it comes to end-to-end AI pipeline development, is a strong understanding of the production environment – how it got there, what it is doing, why the data are in it, and what users are trying to do with it.

In other words, I think all academics are missing is a comprehensive understanding of the production environment of which the AI pipeline is supposed to be a part. If that’s the case, then how do we solve this?

Example of an Academic Helping with End-to-End AI Pipeline

If you ever work on a data science team, you can learn from the other people on the team. Back in about 2006, I worked at a state-funded non-profit research institute that had a bad reputation for being poorly managed. Probably as evidence of this, they put me in the IT department to keep me out of the research department. In addition to me, we had a CIO, a web designer, a security engineer, an applications programmer, and a helpdesk technician.

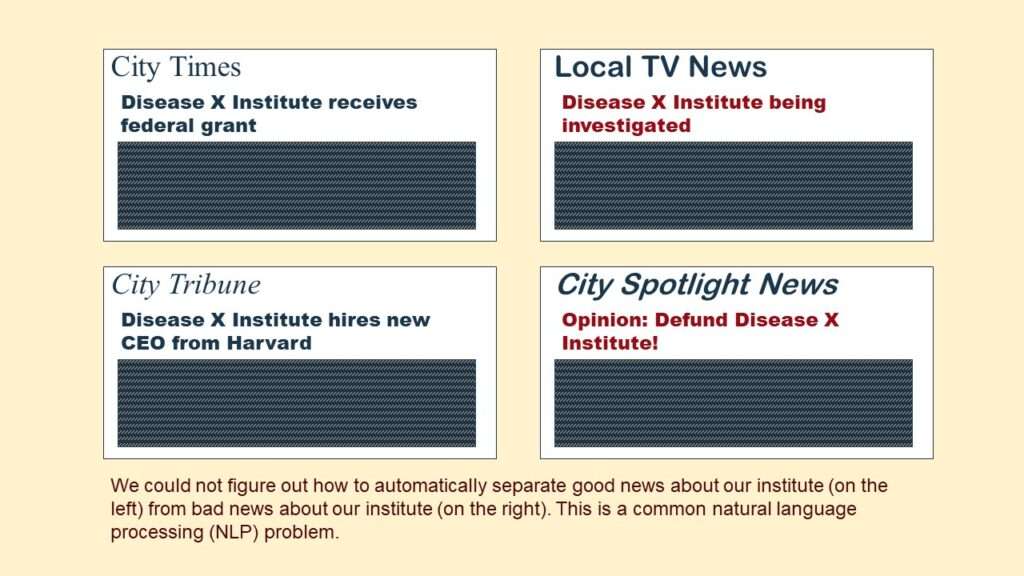

After developing our institute’s web page, we decided we wanted to have current relevant news display on the front of it dynamically. We tried setting up filters on Google News to see if we could filter in daily news that related to our institute’s mission (we were studying a particular disease), and specifically related to our institute. Unfortunately, due to our bad reputation, we saw a lot of bad news come in through the filter. Also, we realized that when some of the good news about our institute came through, we wanted it to linger longer on the web page than the other news.

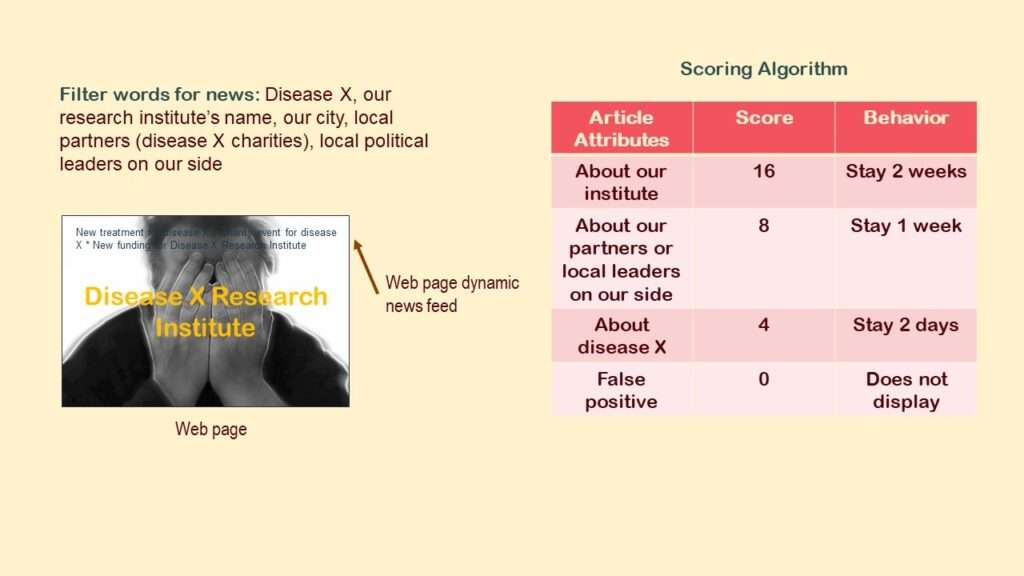

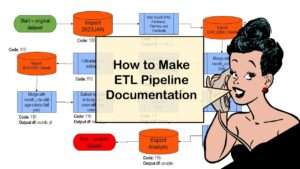

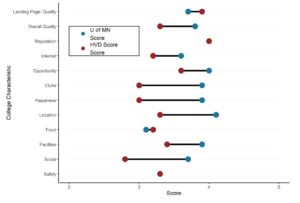

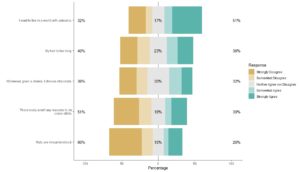

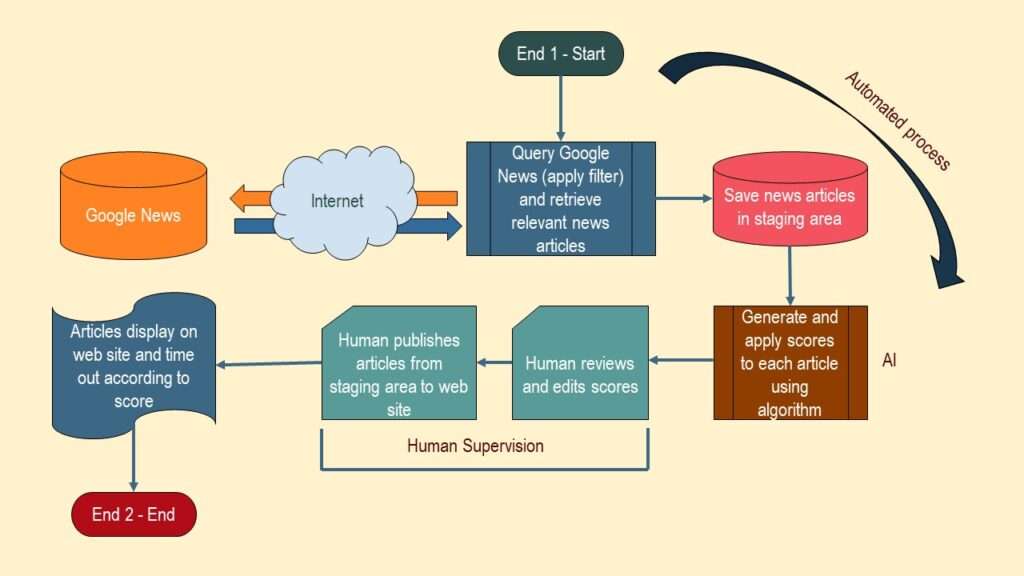

I sat with the applications programmer, and we reviewed the results from the Google News filters. Using what I had learned from developing epidemiologic risk scores, we came up with a scoring algorithm for the articles, as you can see in this graphic.

As you may observe, we wanted to import the articles from Google News that met the filter criteria, and then assign them scores so that the ones we really liked – about our research institute – would display on the web page the longest. The ones that were less relevant – about our disease of study in general – would display for the shortest amount of time. If we gave an article a score of 0 (or no score), it would not display.
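The actual scoring rules live in the graphic, so the Python below is only a rough sketch of the idea, not our real algorithm. The keyword lists, the score values, and the number of display days are all hypothetical placeholders I made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical placeholders; the real institute and disease are not named here.
INSTITUTE_TERMS = ("example research institute",)
DISEASE_TERMS = ("example disease",)

# Hypothetical mapping from score to days on the front page.
DISPLAY_DAYS = {0: 0, 1: 1, 2: 7}


@dataclass
class Article:
    title: str
    summary: str


def score_article(article: Article) -> int:
    """Score 2 for news about the institute, 1 for the disease, else 0."""
    text = f"{article.title} {article.summary}".lower()
    if any(term in text for term in INSTITUTE_TERMS):
        return 2
    if any(term in text for term in DISEASE_TERMS):
        return 1
    return 0


def display_days(score: int) -> int:
    """A score of 0 (or an unknown score) means the article is not displayed."""
    return DISPLAY_DAYS.get(score, 0)
```

The design choice that matters is the ordering: a higher score buys more time on the page, and 0 means the article never appears.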

We tested this system, and unfortunately, it couldn’t be automated. Why? Because we had a bad reputation.

Why We Could Not Automate the AI

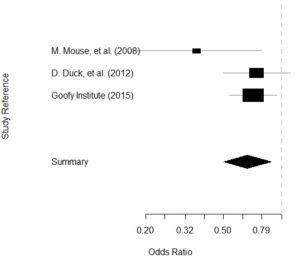

Unfortunately, our institute was in the news a lot for both good and bad reasons. This made it very difficult to tell the difference between news about us we wanted to keep on the web site, and news about us we wanted to suppress (see graphic).

We’d often see bad news articles getting scored with the highest score – meaning to display on our web site the longest. The CIO, web designer, applications programmer and I discussed what to do about it. I suggested that we set up the system with human supervision rather than letting it run fully automated. Basically, before we allowed the articles to display on the web site, we’d have our helpdesk technician go in and look at the scores that were assigned to the articles. That way, he could adjust the scores manually – and manually set all the “bad news” articles to 0 so they’d never display!

As you can see in the graphic, I labeled the part of the flow where the human – our helpdesk technician – manually adjusted the scores. You can also see where I labeled the ends – End 1 is the start, and End 2 is the end.
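Here is a minimal Python sketch of that human review step, under my own assumptions. The function names and article IDs are hypothetical; the point is only that the reviewer’s score wins over the automatic score, and anything left at 0 is held back.

```python
from typing import Dict, List


def apply_review(
    auto_scores: Dict[str, int], overrides: Dict[str, int]
) -> Dict[str, int]:
    """Return final scores, letting the human reviewer's score win."""
    final = dict(auto_scores)  # copy so the automatic scores are preserved
    for article_id, reviewed_score in overrides.items():
        if article_id in final:
            final[article_id] = reviewed_score
    return final


def articles_to_display(final_scores: Dict[str, int]) -> List[str]:
    """Only articles with a score above 0 reach the web page."""
    return [article_id for article_id, score in final_scores.items() if score > 0]


# Hypothetical example: the scorer liked a "bad news" article about us.
auto_scores = {"grant-award": 2, "audit-scandal": 2, "disease-study": 1}
overrides = {"audit-scandal": 0}  # the reviewer sets it to 0

final_scores = apply_review(auto_scores, overrides)
to_display = articles_to_display(final_scores)
```

Keeping the automatic scores untouched and layering the overrides on top means you can always go back and compare what the scorer said with what the human decided.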

There you go! It’s an end-to-end AI pipeline! And it was designed with an academic on the team – me!

How Did an Academic Help Design an End-to-End AI Pipeline?

As you can see, I didn’t design it alone – we all designed it as part of a team. And the person in the video implied this – that he wanted academics to make better team members, so they can help with the whole pipeline. Clearly, all academics have to do is start functioning as part of one of these types of teams. What’s the problem?

The problem is silos in academia. Remember how I mentioned that I’d been banished to the IT department from the research department? This was meant to oppress me, but I took it as an opportunity to work on an IT team, which is basically how data science teams work. Our Harvard-trained CEO had cast me out of academia and into data science, and I just adapted.

I published an article on LinkedIn about how I have recently been advising a lot of academics to do what I did, and just start serving on a data science team. I call it the “sink or swim” approach to gaining data science teamwork culture. There are a few critical problems with my advice, I have found.

- Some academics do not have data science teams in their environment into which they could integrate.

- Others have teams around, but there are structural barriers (mainly reporting lines) that prevent them from trying to serve on a team.

- Many academics are just plain intimidated about trying to integrate into a data science team without any special computer science training or background.

End-to-End AI Pipelines: How to Be an Academic Expert

Are you a professional experienced in academic healthcare or public health who wants to become an expert at designing end-to-end AI pipelines? Do you want to try doing projects like the one I did with my IT department? Then read about my “Public Health to Data Science Rebrand” mentoring program, and see if it’s right for you!

Updated November 25, 2022. Revised banners June 18, 2023.
