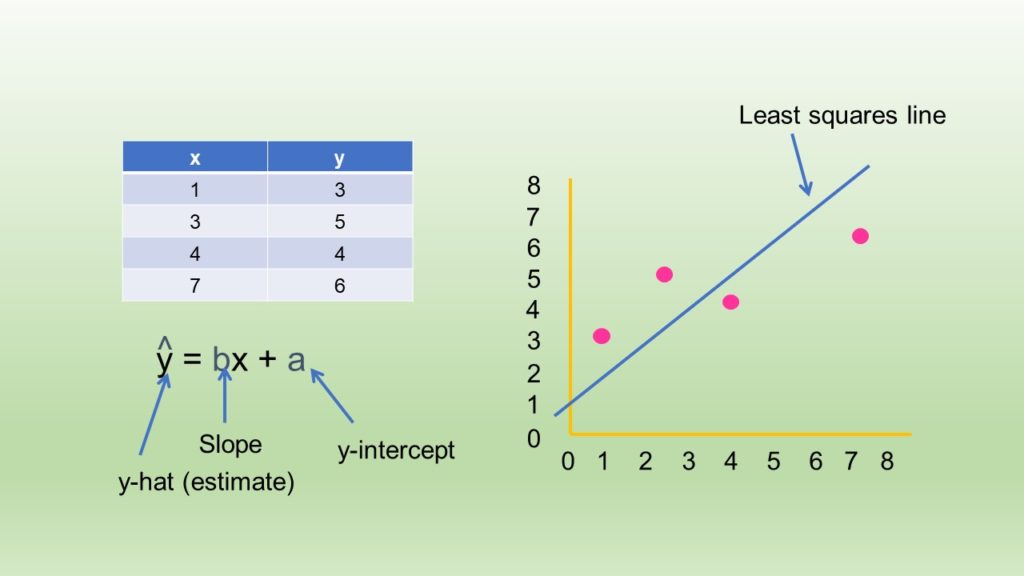

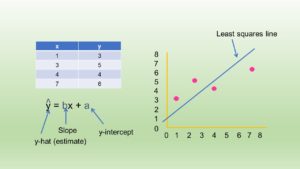

During my failed attempt to get a PhD from the University of South Florida, my doctor friend asked me one day to build a linear regression model using a small dataset he had collected from a lab. He had measurements of these “new” chemical messengers called cytokines – so that definitely dates this story! I was supposed to use patient characteristics as predictors so we could see whether any of them were associated with levels of any particular cytokine.

I remember building a model and wondering whether it was right. When I put all the covariates in, most of them were not significant. If I removed the non-significant ones, the remaining ones became more significant – very suspicious! Then I added interactions. Then I called my professor and asked what to do.

I’ll cut to the chase: she didn’t know what to do, either.

But she did tell me what everyone is essentially supposed to do. First, we are supposed to set up the statistical test before gathering the data. Yeah, good luck with that when dealing with real-life doctors and real-life labs. Next, before we start modeling, we create a set of rules that a model must meet before we accept it and select it as the final model. These rules are called “model specifications”.

Challenges with Model Specification for Regression Modeling

Jim Frost writes quite comprehensively about specifying models on his blog, “Statistics by Jim”. He goes over different ways of making up these rules. For example, for linear regression models, you can pick the model with the highest R-squared. Or you can simply throw out every variable whose slope has a non-significant p-value.
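To make the R-squared rule a bit more concrete, here is a minimal Python sketch (NumPy only, with simulated data invented purely for illustration) that fits ordinary least squares and reports both plain and adjusted R-squared. The function name `r_squared` and the variables `x1` and `noise` are hypothetical. Because plain R-squared can never go down when you add a predictor to a nested least-squares model, “pick the highest R-squared” on its own tends to favor bigger models; adjusted R-squared adds a penalty for each extra predictor.

```python
import numpy as np

def r_squared(X, y):
    """Return (R-squared, adjusted R-squared) for an OLS fit of y on X plus an intercept."""
    n, p = X.shape
    design = np.column_stack([np.ones(n), X])          # prepend an intercept column
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)  # least-squares coefficients
    resid = y - design @ beta
    ss_res = float(resid @ resid)                      # residual sum of squares
    ss_tot = float(((y - y.mean()) ** 2).sum())        # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)  # penalty grows with p
    return r2, adj_r2

# Hypothetical simulated data: y depends on x1 only; `noise` is unrelated to y
rng = np.random.default_rng(0)
n = 30
x1 = rng.normal(size=n)
noise = rng.normal(size=n)
y = 2.0 * x1 + rng.normal(size=n)

r2_small, adj_small = r_squared(x1.reshape(-1, 1), y)
r2_big, adj_big = r_squared(np.column_stack([x1, noise]), y)
print(f"x1 only:    R-squared={r2_small:.3f}  adjusted={adj_small:.3f}")
print(f"x1 + noise: R-squared={r2_big:.3f}  adjusted={adj_big:.3f}")
```

Plain R-squared for the second model is never lower than for the first, even though `noise` carries no information about `y`; adjusted R-squared usually drops in that situation, which is one reason the “which R-squared?” question matters.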

But what’s especially awesome about Statistics by Jim’s post is that after he talks about how to make the specifications, he puts in a section titled, “Real World Complications in the Model Specification Process”. I’ll paraphrase what he says:

Sometimes the data do not behave, and other times, they behave downright badly!

…so the best-laid plans can often go wrong in practice. You might feel tension between different specifications. What if two very different models have exactly the same R-squared? Also, Jim points out that there are different kinds of R-squared, so which one do you choose? What if there are two “sibling” variables that are both significant when they are together in a model, but ruin the rest of the model by making everything else non-significant? You can’t just put in one or the other, because neither sibling is significant alone. This is an example of collinearity.
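One common way to flag “sibling” variables like these is the variance inflation factor (VIF): regress each covariate on all the other covariates and compute 1 / (1 - R-squared). The sketch below is a NumPy-only illustration on made-up data; the names `vif`, `sibling_a`, `sibling_b`, and `age` are hypothetical, and `sibling_b` is deliberately built as a near-copy of `sibling_a`. The cutoffs of 5 or 10 that people often quote are rules of thumb, not hard limits.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of X (X has no intercept column)."""
    n, p = X.shape
    out = []
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)                  # every other covariate
        design = np.column_stack([np.ones(n), others])    # plus an intercept
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ beta
        r2 = 1.0 - (resid @ resid) / ((target - target.mean()) ** 2).sum()
        out.append(1.0 / (1.0 - r2))                      # large when column j is predictable
    return np.array(out)

# Hypothetical data: two nearly identical "siblings" and one unrelated covariate
rng = np.random.default_rng(1)
n = 50
sibling_a = rng.normal(size=n)
sibling_b = sibling_a + rng.normal(scale=0.1, size=n)     # almost a copy of sibling_a
age = rng.normal(size=n)
X = np.column_stack([sibling_a, sibling_b, age])

v = vif(X)
print(np.round(v, 1))
```

The two siblings get very large VIFs while `age` stays near 1, which is the kind of signal that tells you the siblings are competing for the same information.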

In other words, in real life, regression modeling can be hairy.

What to Do? Stepwise, Stepwise, Stepwise!

Over time, we’ve come to a consensus that “stepwise” model specification is a good idea. This means setting up our model specification in steps: first we do this, then we do that, then we do this other thing. It helps us figure out which covariates to keep in the model by giving us more decision points: we filter candidate covariates into a batch, then filter that batch into the next batch. It’s much more organized, and easier to document. So that’s the good news.

The bad news is that there are different kinds of stepwise modeling, and we can’t agree on which one is best, or even what to call each of them. I used to say that what I did was “forward stepwise” modeling, because that’s what I was told it was in college. In fact, that’s what I called it when demonstrating it in my LinkedIn Learning R course on regression.

Then, later, when I was writing this book on descriptive and regression analysis in R and SAS, one of the really helpful peer-reviewers alerted me to this awesome article that actually tries three different logistic regression modeling approaches on the same dataset and compares the answers.

The problem is that the authors use the term “forward stepwise” to mean something different from what I meant in my R course. This worried me, so I asked around and found that some people do call what I did in the R course “forward stepwise”, and some call it “ambi-directional stepwise” – which, sorry, is just too weird for me.
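For reference, forward selection in its common textbook form starts with no covariates, adds whichever candidate improves the model most, and stops when no addition helps. The sketch below uses AIC as the “improves the model” criterion; that is an assumption for illustration (many analysts use p-values instead), and it is not meant to reproduce the procedure from the article or the R course mentioned above. The functions `aic`, `subset`, and `forward_select`, the simulated data, and the names `x0` through `x3` are all hypothetical.

```python
import numpy as np

def aic(X_sub, y):
    """Gaussian AIC (up to a constant) for OLS of y on the columns of X_sub plus an intercept."""
    n = len(y)
    design = np.column_stack([np.ones(n), X_sub])   # X_sub may have zero columns
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    rss = float(((y - design @ beta) ** 2).sum())
    return n * np.log(rss / n) + 2 * design.shape[1]

def subset(X, idx):
    """Columns of X listed in idx (an empty list gives an n-by-0 array)."""
    return X[:, np.array(idx, dtype=int)]

def forward_select(X, y, names):
    """Start empty; add the candidate that lowers AIC most; stop when no addition lowers it."""
    chosen, remaining = [], list(range(X.shape[1]))
    best = aic(subset(X, chosen), y)                 # intercept-only model
    while remaining:
        score, j = min((aic(subset(X, chosen + [k]), y), k) for k in remaining)
        if score >= best:                            # no candidate improves AIC: stop
            break
        best = score
        chosen.append(j)
        remaining.remove(j)
    return [names[j] for j in chosen]

# Hypothetical data: only x0 and x2 truly affect y
rng = np.random.default_rng(2)
n = 100
X = rng.normal(size=(n, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(size=n)
names = ["x0", "x1", "x2", "x3"]
print(forward_select(X, y, names))
```

Because AIC only asks for a modest improvement, a pure-noise variable can occasionally sneak in; that sensitivity to the chosen criterion is part of why people argue about these methods.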

Another popular approach is called “backward stepwise” (also, unfortunately, called “backward elimination”), and the authors tested that one in their article, along with their version of “forward stepwise” and the approach I actually use, which I guess I will just call “stepwise selection”. That’s the approach I’ve been using since my professor coached me on that project, and it’s the one I teach to my customers and in my courses. So that’s what I call it now.
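Backward elimination runs the same idea in reverse: start with every candidate in the model and remove one at a time. Here is a NumPy-only sketch, again assuming AIC as the stopping criterion (p-value thresholds are a common alternative) and using simulated, hypothetical data and function names; it illustrates backward elimination in general, not the “stepwise selection” approach mentioned in this post.

```python
import numpy as np

def aic(X_sub, y):
    """Gaussian AIC (up to a constant) for OLS of y on the columns of X_sub plus an intercept."""
    n = len(y)
    design = np.column_stack([np.ones(n), X_sub])   # X_sub may have zero columns
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    rss = float(((y - design @ beta) ** 2).sum())
    return n * np.log(rss / n) + 2 * design.shape[1]

def subset(X, idx):
    """Columns of X listed in idx (an empty list gives an n-by-0 array)."""
    return X[:, np.array(idx, dtype=int)]

def backward_eliminate(X, y, names):
    """Start with every candidate; drop the one whose removal lowers AIC most; stop when none does."""
    chosen = list(range(X.shape[1]))
    best = aic(subset(X, chosen), y)                 # full model
    while chosen:
        score, j = min(
            (aic(subset(X, [k for k in chosen if k != drop]), y), drop) for drop in chosen
        )
        if score >= best:                            # every removal makes AIC worse: stop
            break
        best = score
        chosen.remove(j)
    return [names[j] for j in chosen]

# Hypothetical data: only x0 and x2 truly affect y
rng = np.random.default_rng(2)
n = 100
X = rng.normal(size=(n, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(size=n)
names = ["x0", "x1", "x2", "x3"]
print(backward_eliminate(X, y, names))
```

On real data, forward selection and backward elimination can settle on different final models, which is exactly why it matters which approach (and which name for it) you are using.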

Okay, Great, Stepwise Selection Is Best. How Do I Learn It?

If you are an R person, you can take my R course on LinkedIn Learning, and if you are a SAS person, you can take my SAS course. But if you are more of a manager type – or you just want to learn how to do model specification without actually doing any programming – take my big data study design course on LinkedIn Learning. I teach practical model specification that you can apply with real world data…

…even when data behave badly!

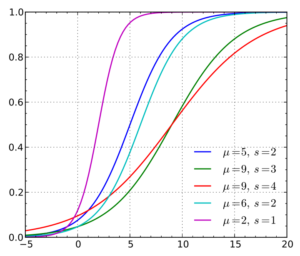

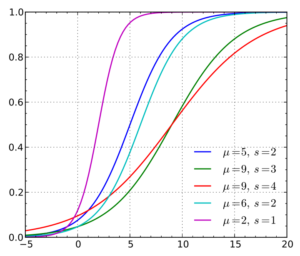

Rainbow logistic regression plot by Krishnavedala

Updated August 24, 2019. Some reformatting and slider added April 3, 2022.

Read all of our data science blog posts!

Confidence intervals (CIs) help you get a solid estimate for the true population measure. Read [...]

Continuous variable categorized can open up a world of possibilities for analysis. Read about it [...]

Delete if rows meet a certain criteria is a common approach to paring down a [...]

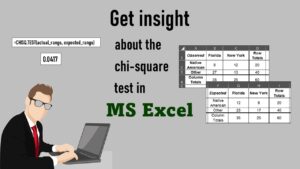

Chi-square test is hard to grasp – but doing it in Microsoft Excel can give [...]

Identify elements in research reports, and you’ll be able to understand them much more easily. [...]

Time periods are important when creating a time series visualization that actually speaks to you! [...]

Apply weights to get weighted proportions and counts! Read my blog post to learn how [...]

Make categorical variables by cutting up continuous ones. But where to put the boundaries? Get [...]

Remove rows by criteria is a common ETL operation – and my blog post shows [...]

CDC Wonder is an online query portal that serves as a gateway to many government [...]

AI careers are not easy to navigate. Read my blog post for foolproof advice for [...]

Descriptive analysis of Black Friday Death Count Database provides an example of how creative classification [...]

Classification crosswalks are easy to make, and can help you reduce cardinality in categorical variables, [...]

FAERS data are like any post-market surveillance pharmacy data – notoriously messy. But if you [...]

Dataset source documentation is good to keep when you are doing an analysis with data [...]

Joins in base R must be executed properly or you will lose data. Read my [...]

NHANES data piqued your interest? It’s not all sunshine and roses. Read my blog post [...]

Color in visualizations of data curation and other data science documentation can be used to [...]

Defaults in PowerPoint are set up for slides – not data visualizations. Read my blog [...]

Text and arrows in dataviz, if used wisely, can help your audience understand something very [...]

Shapes and images in dataviz, if chosen wisely, can greatly enhance the communicative value of [...]

Table editing in R is easier than in SAS, because you can refer to columns, [...]

R for logistic regression in health data analytics is a reasonable choice, if you know [...]

Connecting SAS to other applications is often necessary, and there are many ways to do [...]

Portfolio project examples are sometimes needed for newbies in data science who are looking to [...]

Project management terminology is often used around epidemiologists, biostatisticians, and health data scientists, and it’s [...]

“Rapid application development” (RAD) refers to an approach to designing and developing computer applications. In [...]

Understanding legacy data is necessary if you want to analyze datasets that are extracted from [...]

Front-end decisions are made when applications are designed. They are even made when you design [...]

Reducing query cost is especially important in SAS – but do you know how to [...]

Curated datasets are useful to know about if you want to do a data science [...]

Statistics trivia for data scientists will refresh your memory from the courses you’ve taken – [...]

Management tips for data scientists can be used by anyone – at work and in [...]

REDCap mess happens often in research shops, and it’s an analysis showstopper! Read my blog [...]

GitHub beginners – even in data science – often feel intimidated when starting their GitHub [...]

ETL pipeline documentation is great for team communication as well as data stewardship! Read my [...]

Benchmarking runtime is different in SAS compared to other programs, where you have to request [...]

End-to-end AI pipelines are being created routinely in industry, and one complaint is that academics [...]

Referring to columns in R can be done using both number and field name syntax. [...]

The paste command in R is used to concatenate strings. You can leverage the paste [...]

Recoloring plots in R? Want to learn how to use an image to inspire R [...]

Adding error bars to ggplot2 in R plots is easiest if you include the width [...]

“AI on the edge” was a new term for me that I learned from Marc [...]

Pie chart ggplot style is surprisingly hard to make, mainly because ggplot2 did not give [...]

Time series plots in R are totally customizable using the ggplot2 package, and can come [...]

Data curation solution that I posted recently with my blog post showing how to do [...]

Making upset plots with R package UpSetR is an easy way to visualize patterns of [...]

Making box plots in R affords you many different approaches and features. My blog post [...]

Convert CSV to RDS is what you want to do if you are working with [...]

GPower case example shows a use-case where we needed to select an outcome measure for [...]

Querying the GHDx database is challenging because of its difficult user interface, but mastering it [...]

Variable names in SAS and R are subject to different “rules and regulations”, and these [...]

Referring to variables in processing is different conceptually when thinking about SAS compared to R. [...]

Counting rows in SAS and R is approached differently, because the two programs process data [...]

Native formats in SAS and R of data objects have different qualities – and there [...]

Looking for a SAS-R integration example that uses the best of both worlds? I show [...]

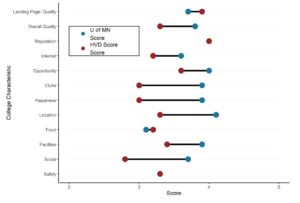

Want to compare multiple rankings on two competing items – like hotels, restaurants, or colleges? [...]

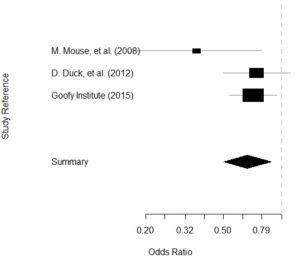

Getting data for meta-analysis together can be challenging, so I walk you through the simple [...]

Get to know three of my favorite SAS documentation pages: the one with sort order, [...]

I use the datasets from the Behavioral Risk Factor Surveillance Survey (BRFSS) to demonstrate in [...]

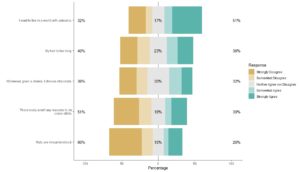

I love the Likert package in R, and use it often to visualize data. The [...]

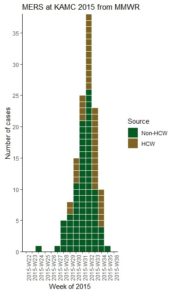

With all this talk about “flattening the curve” of the coronavirus, I thought I would [...]

During my failed attempt to get a PhD from the University of South Florida, my [...]

Trying to decide what independent variables belong in a regression can be daunting. My blog post shows you the evidence-based way I make modeling decisions about keeping independent variables.

Over time, we’ve come to a consensus that “stepwise” model specification is a good idea. This means setting up our model specification in steps: first we do this, then we do that, then we do this other thing. It can help us figure out what covariates to keep in the model by giving us more decision points so we can filter in a batch, then filter that batch into the next batch. Much more organized, and easier to document. So that’s the good news.

Over time, we’ve come to a consensus that “stepwise” model specification is a good idea. This means setting up our model specification in steps: first we do this, then we do that, then we do this other thing. It can help us figure out what covariates to keep in the model by giving us more decision points so we can filter in a batch, then filter that batch into the next batch. Much more organized, and easier to document. So that’s the good news.