Snowflake is a new big data cloud back-end solution, and the company has been holding “Data for Breakfast” meetings in many different cities to introduce data scientists to their product. I went to the meeting held on March 4, 2020 at the Marriott Long Wharf in Boston, and here is my review.

Not My First “Data for Breakfast”

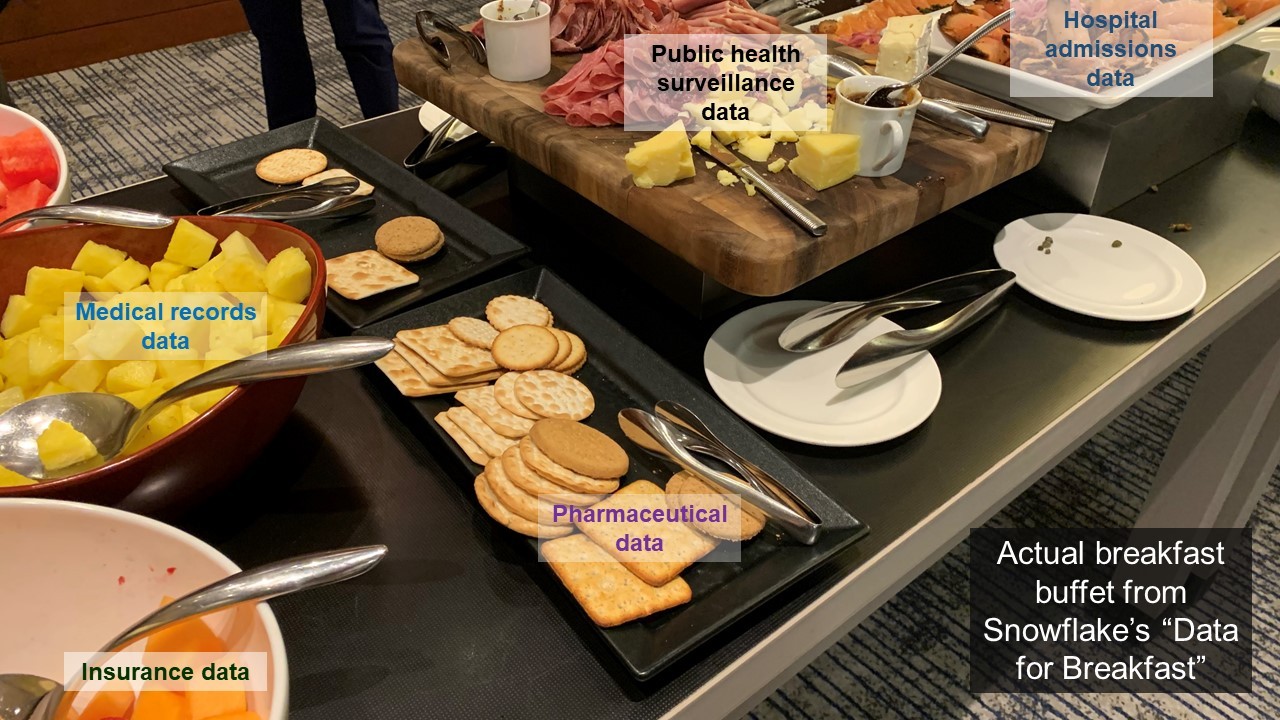

Actually, I had attended this exact same Snowflake “Data for Breakfast” a few months before, but I didn’t post on my blog because I had nothing to report, as the meeting suffered numerous technical issues and was effectively derailed. The agenda of that meeting had been similar to this one: We start at 8:15 am, where we get time to eat a lovely free breakfast and network with others. Then, we scurry to our seats with our coffee at 9:00 am to watch a few speakers and a panel until 11:00 am, when we can stay for an optional workshop.

Our 9:00 am speaker mentioned that this was their fourth Data for Breakfast in Boston, so I guess I attended the third one previously. What’s great about these Data for Breakfast meetings (besides the breakfast!) is they give you a free trial account for Snowflake, and then you can actually use it and see what it’s like. What didn’t go so well the last time I attended is that no one could get their free trial account working. That’s why I came back: I didn’t get a chance to try it last time.

Better Speakers at this “Data for Breakfast”

The speakers at the last one I attended were so forgettable that I forgot them. This time was different. They started with two speakers from Snowflake, followed by a speaker from one of their customer companies, Data Robot. The Snowflake presenters were upbeat, but all they did was show a slide deck of crazy conceptual diagrams that really didn’t speak to me. It was kind of like a Snowflake data rally, rather than an informative lecture. What I did find informative were their case studies, and I will focus specifically on the one about McKesson, because that company has a ton of healthcare data.

Representative from Snowflake Customer Data Robot was Impressive

Lisa (I didn’t catch her last name) from Data Robot presented, and she was dynamite. She started by asking how many people were “actively” doing artificial intelligence (AI) or machine learning for their organization. I was surprised that there were very few hands raised, since AI and machine learning are all the rage.

Then she went on to point out how important it is that you vet your AI approach – especially if you use a platform like Data Robot’s. You have to care about how well you can “trust” the platform – meaning how well you can use the platform to deal with the limits of AI, how well the platform allows you to robustly develop and deploy evidence-based AI, and how well the platform allows you to continuously monitor your AI and maintain it to ensure it does not break.

I’m falling in love with Data Robot because they are so into data stewardship, data curation, evidence-based AI, and just good data practices. I just posted recently about a podcast where one of their data scientists was interviewed about IBM Watson. The scientists at Data Robot realize that bias, lack of training data, and other hobgoblins that creep into AI are as serious as a heart attack. They see information and data literacy as something that everyone involved in the project – partners, employees, even non-data participants – should have. We all need to respect the data, and know the ethics.

Lisa emphasized that you need to operationalize and monitor your algorithms – but only a small percentage are monitored, even though many decisions are being made every day based on these AI algorithms. She pointed out that the reason that people are not monitoring their AI is not necessarily because they don’t want to, but because there is so much data – and so much disparate data – involved in AI algorithms, making monitoring challenging. Remember McKesson’s 60 data silos? As Lisa put it,

“You need a buffet of data” for AI.

Lisa’s talk helped me better understand not only what Snowflake does, but what Data Robot does.

My Recommendation: Check out Snowflake’s Data for Breakfast Meeting if You Can

I’m glad I didn’t publicly evaluate the Snowflake Data for Breakfast meeting on the basis of my experience the first time I came, because they really improved this time. Here are the changes I saw:

- When I signed up for my free trial account, the e-mail to verify it actually came. Aside from initially flaky internet, there were no more technical problems.

- The presentations made a few important points about Snowflake clearer. One is that it is SQL-based, and another is that it runs on Microsoft Azure, AWS, and Google Cloud Platform (GCP). Therefore, your Snowflake implementations are only as “private and confidential” as Azure, AWS, and GCP.

- Unlike last time, there were extension cords set out so there was some hope of plugging in a laptop. However, they were in an inconvenient spot, so I still found myself sitting on the floor and threatening to trip someone.

- This time, the event was much more organized. Even though the last meeting had roughly the same format, it seemed chaotic for some reason. This one was much calmer, and it was clearer who was from Snowflake so you could ask questions.

- The lab was very well organized – at least on paper. The written exercise lab book is unbelievably well-written, and the environment is well set up. The lab walks you through two different data examples, and I did the first one myself while listening to the speakers.

- The Data Robot speaker was fantastic! Her talk gave me a chance to better understand how Snowflake can be applied in a use case.

The participants in this meeting will have an excellent breakfast and a worthy experience demoing the platform. My recommendation to Snowflake is to do the lab first, then the talking part. The talk seems esoteric without having an applied experience first. I’m drawing on my knowledge of deeper learning, and also on my knowledge of the audience: We are all data scientists in the room, so we are all “applied learners”. It will make more sense for us to hear the lectures after we see how the product works.

This is probably why I found I learned more the second time I attended. They apparently let you attend as many times as you want, so if you still have questions after your first meeting and you have the opportunity, go again!

Updated March 4, 2020.